Azure customers globally reported major service failures late Wednesday – Microsoft said issues with its Azure Front Door service had triggered an incident that took it over eight hours to fully mitigate.

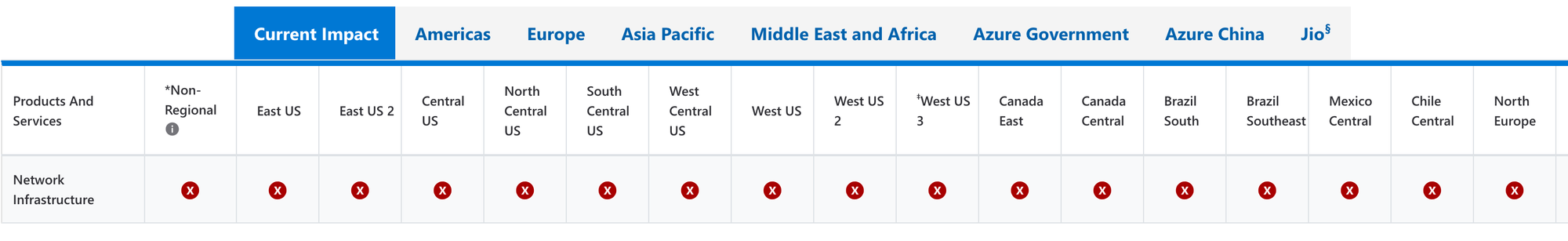

The Azure outage was flagged as "critical" across every global region on Azure's status dashboard and had a major knock-on effect on at least 20 Azure services including Azure Portal, IAM and container registry.

Azure Front Door (AFD) is Microsoft’s Content Delivery Network (CDN).

Microsoft separately attributed the incident to a DNS error.

Updated 8:50 BST October 30: Azure early Thursday blamed "an inadvertent tenant configuration change within AFD [that] triggered a widespread service disruption affecting both Microsoft services and customer applications dependent on AFD for global content delivery. The change introduced an invalid or inconsistent configuration state that caused a significant number of AFD nodes to fail to load properly, leading to increased latencies, timeouts, and connection errors for downstream services," it said.

We've identified a recent configuration change to a portion of Azure infrastructure which we believe is causing the impact. We're pursuing multiple remediation strategies, including moving traffic away from the impacted infrastructure and blocking the offending change.

— Microsoft 365 Status (@MSFT365Status) October 29, 2025

"Starting at approximately 16:00 UTC, we began experiencing DNS issues resulting in availability degradation of some services. Customers may experience issues accessing the Azure Portal. We have taken action that is expected to address the portal access issues here shortly," the Azure status page showed, .

"Users may be unable to access the Microsoft 365 admin center... admins are reporting issues when attempting to access some Microsoft Purview and Microsoft Intune functions. Users are also seeing issues with add-ins and network connectivity in Outlook" service alert (MO1181369) showed.

Azure outage blamed on configuration change

The issue appears to be that pesky known-known: a configuration change breaking things. Redmond later added: "As unhealthy nodes dropped out of the global pool, traffic distribution across healthy nodes became imbalanced, amplifying the impact and causing intermittent availability even for regions that were partially healthy. We immediately blocked all further configuration changes to prevent additional propagation of the faulty state and began deploying a ‘last known good’ configuration across the global fleet. Recovery required reloading configurations across a large number of nodes and rebalancing traffic gradually to avoid overload conditions as nodes returned to service. This deliberate, phased recovery was necessary to stabilize the system while restoring scale and ensuring no recurrence of the issue.

"The trigger was traced to a faulty tenant configuration deployment process. Our protection mechanisms, to validate and block any erroneous deployments, failed due to a software defect which allowed the deployment to bypass safety validations. Safeguards have since been reviewed and additional validation and rollback controls have been immediately implemented to prevent similar issues in the future."

The incident, however short-lived, will trigger further hand-wringing in many quarters over cloud concentration risk, after AWS's US-EAST-1 borkage triggered a smorgasbord of commentary on architecting for resilience, lock-in, brain-drains, et al. Even some AWS customers appeared to be affected and scrambling to assess impact from the Azure outage.