A new DeepSeek model out this week represents a step towards a “next-generation architecture” the Chinese AI lab said, as it works on maximising inference performance in compute and memory-constrained environments.

The jury is still out on the merits of DeepSeek-V3.2-Exp, a model which dropped on Monday but it certainly does things differently – both architecturally and on pricing.

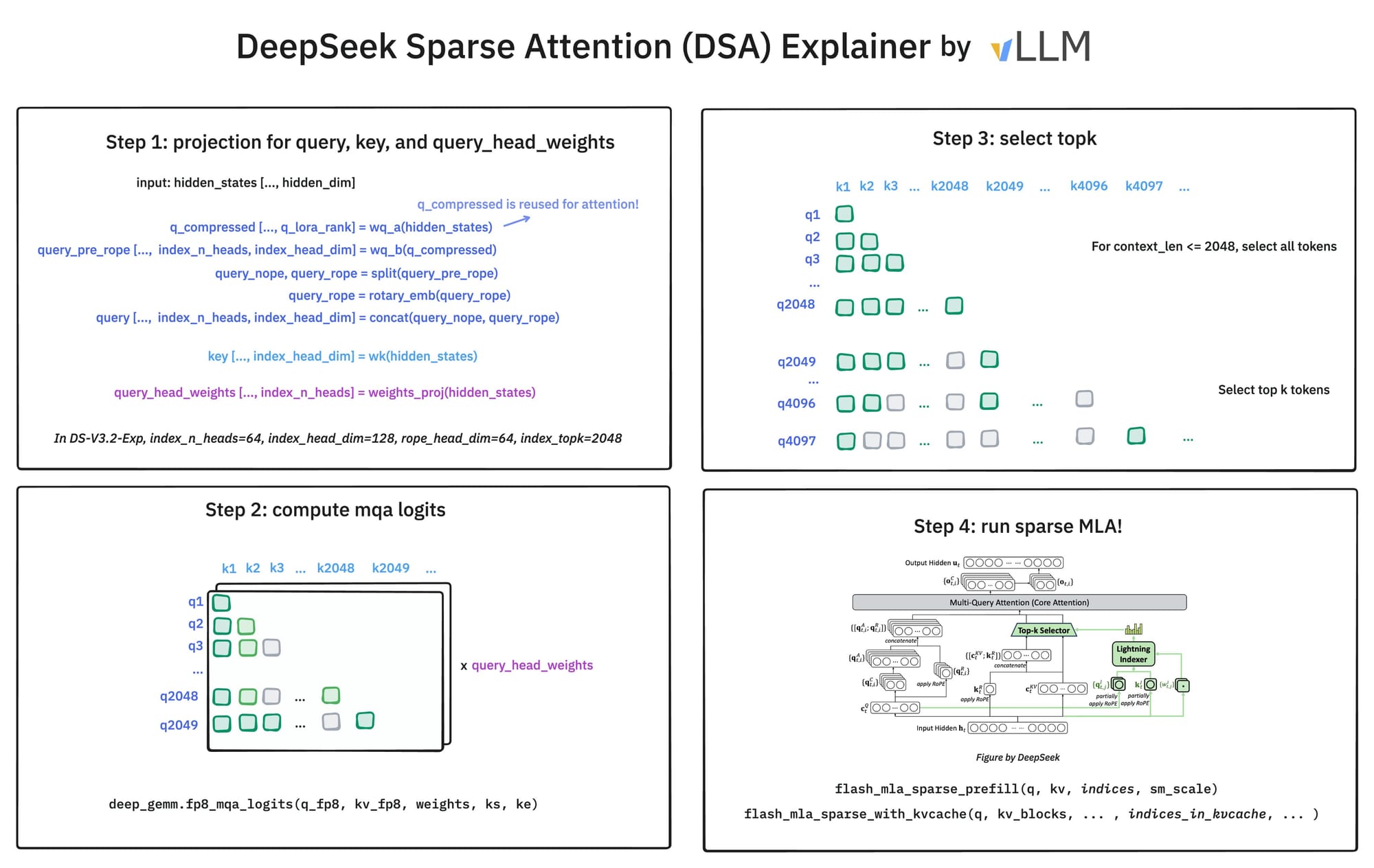

At its heart is a new “sparse attention” approach, which it calls the DeepSeek Sparse Attention (DSA). This comprises a “lightning indexer” and a token selection mechanism. Crudely, the former scores incoming queries and passes on only a selected subset of tokens; an approach to pruning and tuning queries that it said “requires much less computation.”

Sparse attention: An urgent need?

(DeepSeek’s founder Liang Wenfeng co-wrote a paper on sparse attention earlier this year, saying “the high complexity of vanilla Attention mechanisms emerges as a critical latency bottleneck as sequence length increases… attention computation with softmax architectures accounts for 70–80% of total latency when decoding 64k-length contexts, underscoring the urgent need for more efficient attention mechanisms.)

Data scientists, fight Wenfeng on that if so inclined.

One thing that is less arcane to the mere mortal: DeepSeek’s API pricing also changes the game. Perhaps learning from GPT-5 dramas, DeepSeek said it would keep API access for the model’s predecessor V3.1-Terminus going until at least October 15, for comparison testing. But regardless of which model you use, API pricing has been slashed, with the company's claim of halving prices seemingly actually understating things a bit.

Output tokens are now priced at $0.42 per million, a 75% drop, while cache-miss inputs are down from $0.56 to $0.28 per million. For cache-hit inputs, the price is down from 1/8th the non-cache price to a tenth.

Small-model pricing

Google's "smallest and most cost effective model, built for at scale usage", Gemini 2.5 Flash-Lite, is priced at $0.40 for a million output tokens.

In August, OpenAI moved GPT-5 pricing to match Gemini, at $10 per million output tokens. There has been an eerie similarity in pricing ever since – and now DeepSeek matches the lightweight models of both those companies; gpt-5-nano and gpt-4.1-nano also charge $0.40 per million output tokens, at standard priority.

Anthropic's cheapest offering, Claude Haiku 3, comes in at $1.25 per million output tokens, while xAI charges $0.50 for Grok 4 Fast.

"Although our internal evaluations show promising results of DeepSeek-V3.2-Exp, we are actively pursuing further large-scale testing in real-world scenarios to uncover potential limitations of the sparse attention architecture," DeepSeek said in a paper on DeepSeek-V3.2-Exp.

Sign up for The Stack

Interviews, insight, intelligence, and exclusive events for digital leaders.

No spam. Unsubscribe anytime.