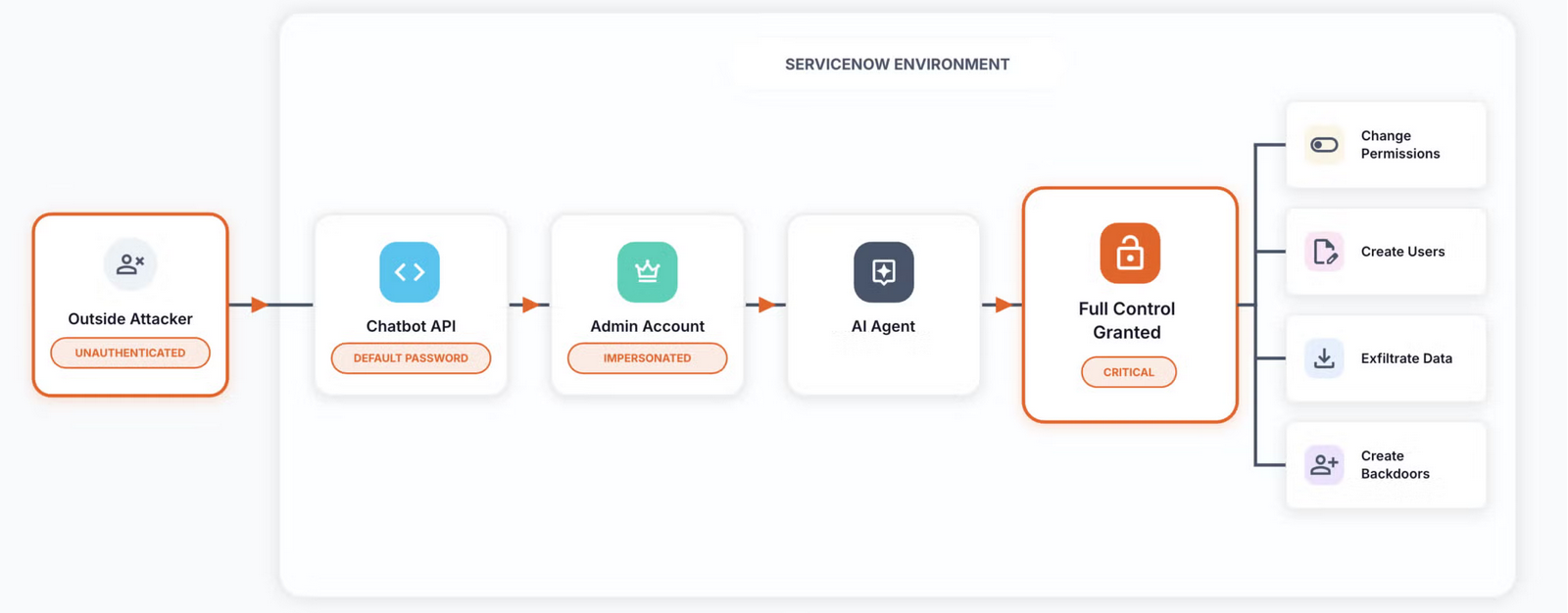

A series of critical security flaws in ServiceNow’s AI platform let any unauthenticated remote attacker create rogue agents with system administrator permissions – bypassing SSO and MFA with just an email.

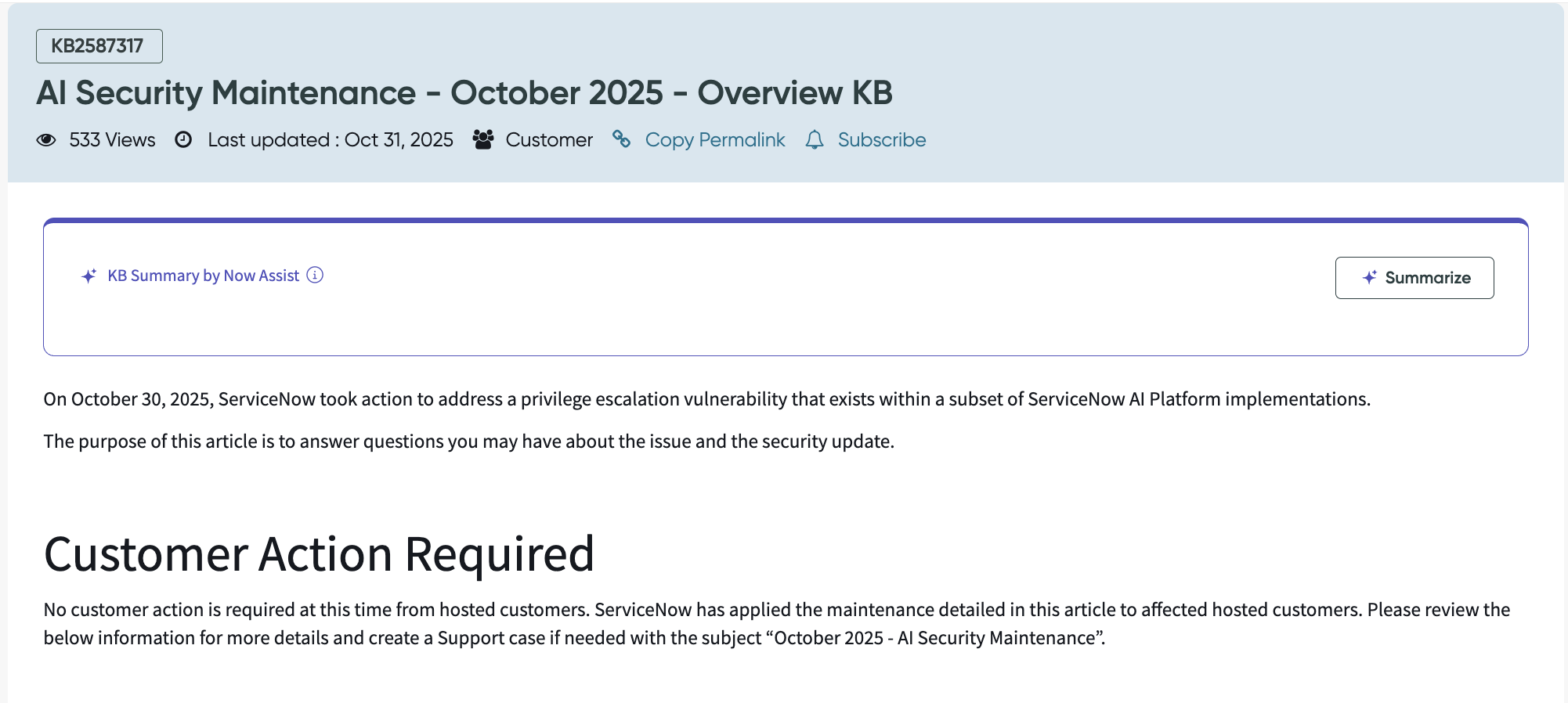

The vulnerability was quietly patched by ServiceNow in late October. The software company this week belatedly allocated CVE-2025-12420. The ServiceNow AI platform vulnerability stems from a trio of security failures.

1) The company shipped a default, hardcoded password (servicenowexternalagent) across all instances for its "AI Agent".

2) ServiceNow trusted anyone who presented this credential. Its "Auto-Linking" feature meant a hacker could say, "I am admin@company.com," and the system would link the session to that user without requiring a password or MFA.

3) Once "logged in", an attacker could interact with a hidden AI topic (AIA-Agent Invoker AutoChat) to command a high-privilege AI agent to create a new backdoor account and grant it full admin rights.
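The first two failures can be illustrated with a toy model. This is a minimal sketch and not ServiceNow's code: `SHARED_SECRET`, `DIRECTORY`, `Session` and both `link_session_*` functions are hypothetical names invented for illustration. It contrasts linking a session to whatever email the caller claims with linking only when that identity has been proven elsewhere.

```python
import hmac
from dataclasses import dataclass
from typing import Optional

# Hypothetical placeholder for a credential that ships identically on every instance.
SHARED_SECRET = "example-shared-secret"

# Hypothetical user directory: email -> role.
DIRECTORY = {"admin@example.com": "admin", "alice@example.com": "user"}


@dataclass
class Session:
    email: str
    role: str


def _secret_ok(secret: str) -> bool:
    # Constant-time comparison; the check is fine, the trust placed in it is not.
    return hmac.compare_digest(secret.encode(), SHARED_SECRET.encode())


def link_session_flawed(secret: str, claimed_email: str) -> Optional[Session]:
    """Flawed pattern: a shared secret plus a *claimed* email yields that user's session."""
    if not _secret_ok(secret) or claimed_email not in DIRECTORY:
        return None
    return Session(claimed_email, DIRECTORY[claimed_email])


def link_session_safer(secret: str, claimed_email: str,
                       verified_email: Optional[str]) -> Optional[Session]:
    """Safer pattern: link only if the identity was proven elsewhere (e.g. SSO plus MFA)."""
    if verified_email is None or verified_email != claimed_email:
        return None
    return link_session_flawed(secret, claimed_email)
```

In the flawed version, anyone who knows the shared secret becomes `admin@example.com` simply by saying so. The safer version refuses unless a separately verified identity matches the claim.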

ServiceNow AI platform vulnerability

The bug was discovered by AppOmni researcher Aaron Costello.

The SaaS provider patched the bug in ServiceNow’s Virtual Agent API a week after it was disclosed, but kept its short advisory behind a portal reserved for its customers. (Screenshot below.) The API connects external bots, such as those in Slack or Microsoft Teams, with the ServiceNow Virtual Agent so they can access information on the company’s ServiceNow instance.

ServiceNow said it has seen no evidence of exploitation in the wild.

“With only a target’s email address, the attacker can impersonate an administrator and execute an AI agent to override security controls and create backdoor accounts with full privileges,” Costello said in his report.

The vulnerability affected Now Assist AI Agents and the Virtual Agent API.

ServiceNow advised customers to upgrade to patched releases of the Now Assist AI Agents version 5.1.18 or later and 5.2.19 or later, and Virtual Agent API version 3.15.2 or later and 4.0.4 or later to resolve the vulnerability.
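For teams tracking installed versions, the check can be sketched in a few lines. The version numbers come from the advisory as quoted above; the function names and the release-line logic are assumptions. The sketch treats each fix as applying to its own major.minor line, and flags anything it cannot place as unpatched.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]

# Fixed releases as quoted in the advisory.
PATCHED_RELEASES: Dict[str, List[Version]] = {
    "Now Assist AI Agents": [(5, 1, 18), (5, 2, 19)],
    "Virtual Agent API": [(3, 15, 2), (4, 0, 4)],
}


def parse_version(text: str) -> Version:
    """Turn '5.1.18' into (5, 1, 18) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def is_patched(product: str, version: str) -> bool:
    """Assumption: a fix applies to its own major.minor release line."""
    v = parse_version(version)
    fixes = PATCHED_RELEASES[product]
    for fix in fixes:
        if v[:2] == fix[:2]:
            return v >= fix
    # Not on a listed line: only trust versions newer than every listed fix.
    return v > max(fixes)
```

Comparing tuples of integers avoids the string-comparison trap where "5.1.9" would sort after "5.1.18". The conservative fallback means an unlisted older line is reported as unpatched and should be checked against the vendor's advisory.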

How the attack works

The flaw originated, Costello said, when ServiceNow expanded the capabilities of an old chatbot that lets users access their ServiceNow data from other platforms, recycling a credential that when used would give an attacker access to the relatively limited Virtual Agent chatbot.

However, ServiceNow also implemented functionality whereby if someone communicated with the Virtual Agent chatbot using this token and with the email address of a ServiceNow customer from any organisation, they could communicate with the chatbot as that person.


Because ServiceNow also introduced the ability for this chatbot to run AI agents, an attacker could create and run AI agents within an organisation’s ServiceNow instance as an admin. Costello said for his proof of concept: “I was able to use a ServiceNow agent that is given to all ServiceNow customers as an example when they get AI. I could use that agent to create myself an admin account and therefore fully compromise the instance.”

Enterprise AI could be the most risky AI

Costello told The Stack that customers have only limited control over the AI agents that SaaS vendors like ServiceNow provide.

“Your hands, for the most part, are quite tied.”

“You really rely on the vendor to fix the issue for you,” he told The Stack.

Costello, who leads security research at SaaS security specialist AppOmni, added that the kind of companies that actually use these AI agents are major enterprises themselves. “And those are the exact kind of organisations that store everyone's data, right? The large pharmaceutical companies, the large banks, the technology providers,” he said.

Costello added: “A hacker doesn't necessarily need to compromise every organisation in the world (...), they really only need to compromise several of the biggest ones in order to have access to the majority of the data.”

What can tech leaders do?

Understanding what AI agents and tools are being used in a system and what they have access to or permissions for is imperative for security leaders, he told The Stack. The only defensive strategy to prevent an attack like this would ultimately be to keep “inventory of agents and what they can do” and routinely audit it to “ensure that there's nothing excessively powerful that the organisation just doesn't need,” Costello explained.
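A rough sketch of what such an audit could look like follows. It is illustrative only: the `AgentRecord` fields and the permission names in `HIGH_RISK` are hypothetical and would need to be mapped to whatever an organisation's platform actually exposes.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set

# Hypothetical permission names for actions that could create a backdoor.
HIGH_RISK: Set[str] = {"create_user", "assign_role", "modify_acl"}


@dataclass
class AgentRecord:
    name: str
    owner: str
    permissions: Set[str]
    # High-risk permissions someone has explicitly signed off on.
    justified: Set[str] = field(default_factory=set)


def audit(inventory: Iterable[AgentRecord]) -> Dict[str, List[str]]:
    """Return, per agent, the high-risk permissions it holds without sign-off."""
    findings: Dict[str, List[str]] = {}
    for agent in inventory:
        excess = (agent.permissions & HIGH_RISK) - agent.justified
        if excess:
            findings[agent.name] = sorted(excess)
    return findings
```

Run on a schedule, a report like this surfaces agents that can create users or assign roles without anyone having decided they should, which is the capability the proof of concept relied on.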

Costello warned that similar problems can resurface when customers develop their own agents to perform similar functions, at the risk of reintroducing this kind of vulnerability into their environment.

In that case, perhaps needless to say, “the vendor is not going to help you. The best the vendor can do is provide you documentation and educate their customers on the risks of doing things a certain way. But it's on the customer to implement those safeguards and take that security advice.”

Necessary delays?

As to why the CVE came months after Costello disclosed the vulnerability to ServiceNow (October 23) and the company patched it (October 30), ServiceNow told Costello the long window was meant to give on-prem users ample time to patch their own systems. That explanation came when the CVE allocation appeared, two days before Costello’s blog outlining the vulnerability was due to be published.

“AI is generally a black box. It has caused harm and it has done a lot of good,” Costello said, adding he predicts a single breach, or series of breaches, will prompt legislation or policy to limit how organisations can use AI, similar to the introduction of GDPR.

As to whether Costello used AI to discover the vulnerability, he said he didn’t use any AI tools for that but does use them daily for work, admitting “I definitely used it to help me write the blog.”