AI

The Elon Musk-led company is funding its data centre build-out and product expansion for the business market.

The buzz is deafening. But GitHub bug reports flag warning signs over capacity buffers...

Steve Klabnik co-wrote the book on Rust. LLMs may now be good enough that he can write a better compiler in Rue, he thinks.

Here are predictions from technology leaders on the hottest topic of the season: AI.

A leak shows the rawest of OpenAI's margins is looking strong, as two Chinese competitors prepare for IPO but keep their numbers close to their chests.

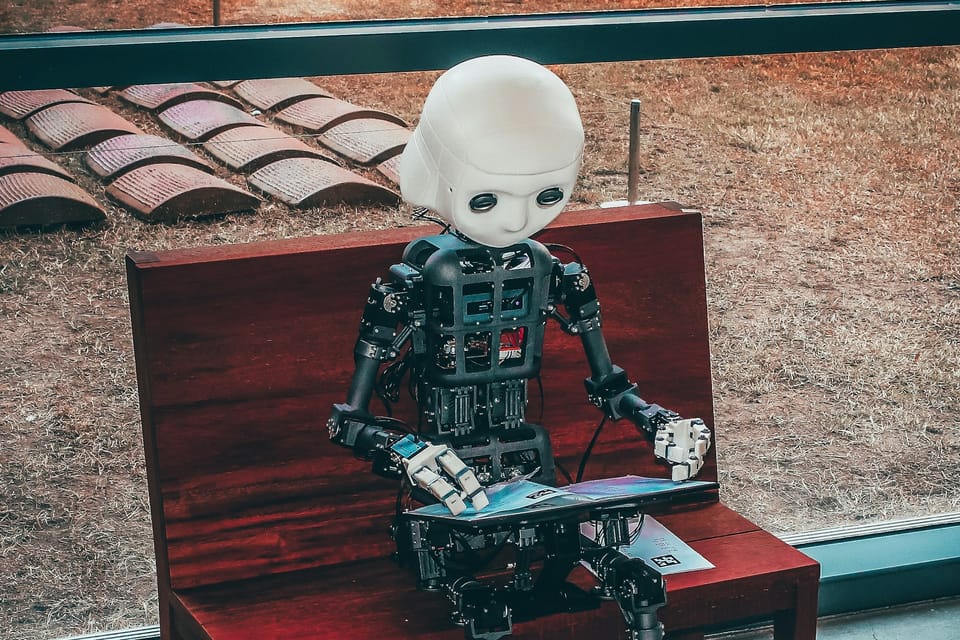

"If the AI bubble ever starts to deflate, footage of this exhibition floor would feature prominently in a Netflix documentary about it."

"Advanced AI" is now more than 10% of its new bookings, but that's the last time you'll see that number.