Cisco is expanding its silicon portfolio with its first AI networking chip, designed to boost energy efficiency in data centres.

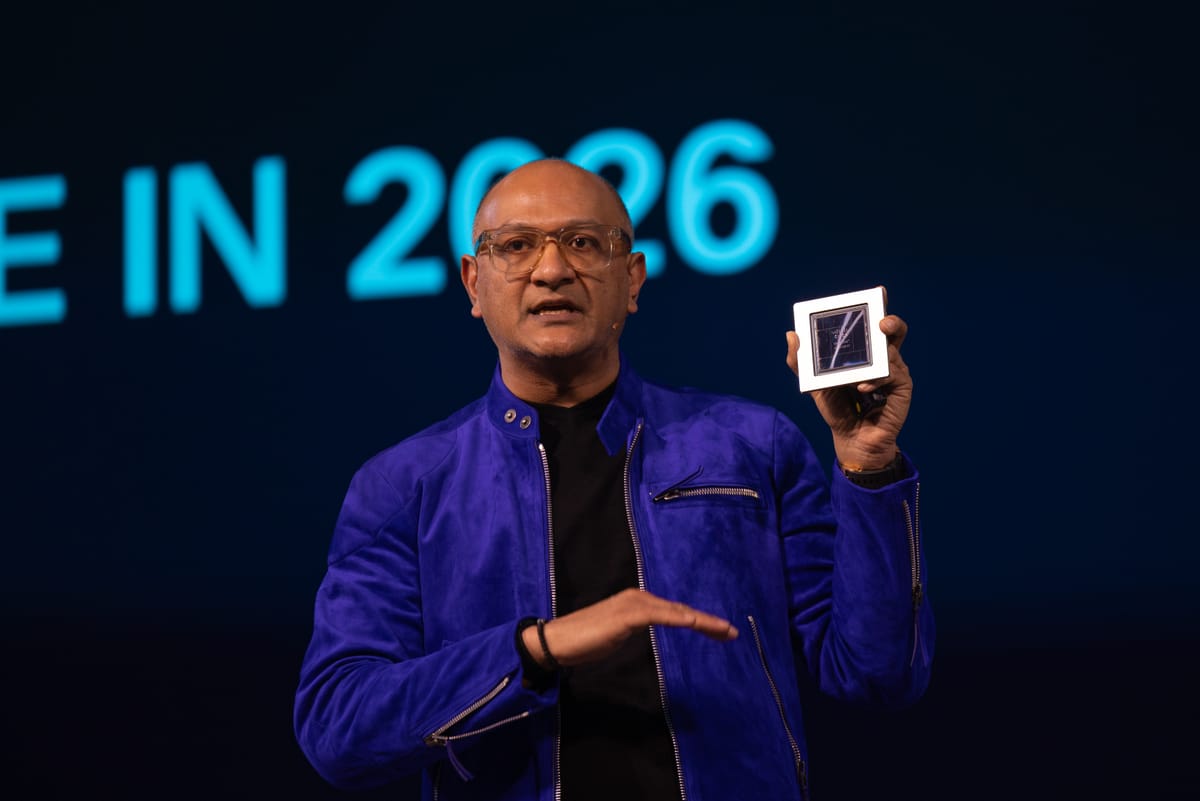

President and CPO Jeetu Patel revealed Cisco's Silicon One G300, a 102.4 Tbps switching chip to connect AI training and inference chips within data centres.

Speaking at Tuesday's Cisco Live conference in Amsterdam, where The Stack was in attendance, the Cisco exec said there would be a “whole new Cisco” geared towards AI networks.

Cisco said it wants to stay friendly with NVIDIA even as it competes with the AI chipmaker for a slice of the $600 billion AI infrastructure market, where optimising networking between stacks of AI chips is a growing segment.

The programmable chip will be built on 3nm technology from TSMC and is set to be generally available later in 2026.

Competing with partners

The chip puts Cisco in direct competition with similar offerings such as NVIDIA's Spectrum-X Ethernet chips and Broadcom’s Tomahawk family. Patel said he still believes in partnering with competitors to support an "open ecosystem."

During a press briefing, he said: “My formula that I use is that anyone who has more than a 20% share - if you don't integrate with them, you're not actually doing anything else besides excluding yourself from that market.”

He added: "We wouldn't be investing the time, money and effort with NVIDIA if we thought this was a one-year partnership, it wouldn't make any sense."

NVIDIA and Cisco partner on a number of products, including the Cisco Secure AI Factory, a full-stack AI architecture that includes servers, GPUs, storage, and software from both companies.

AI chips for specific needs

Hardware group EVP Martin Lund said vendor lock-in concerns and demand for “specialised compute functions” also kept Cisco positive about its position in the chip market going forward.

Responding to a question on the NVIDIA partnership, he said: “We’ve optimised our silicon for some very specific use cases based on our networking knowledge from the past 40 years, and our bet is that’s going to be important in a certain way.

"But this is a very large market and I think over time AI compute will become disaggregated and you'll have specialised compute functions for different [processes]. It's why networking has to be open, because you're going to have different accelerators running on it, you can't just have a vendor lock-in solution."

The G300 is one component of a “scale out” hardware launch for the networking company. The new chip powers N9000 and 8000 Ethernet systems for higher bandwidth, i.e. capacity for moving data, in data centres.

All elements are optimised for energy efficiency as AI users seek to limit the soaring energy costs of running AI agents. An 800G Linear Pluggable Optics (LPO) device and a 1.6T Octal Small Form-factor Pluggable (OSFP) optics device, both also included in the launch, are claimed to reduce power consumption by 50% compared to "retimed optical modules."