Google has released its much-awaited Gemini 3 large language model.

Gemini 3 is a sparse mixture-of-experts (MoE), transformer-based multimodal model with a context window of up to 1 million tokens.

It was trained on Google’s custom TPU hardware and is being built into Google Search from day one, the first time Google has done so with a new model.

"AI Mode in Search now uses Gemini 3 to enable new generative UI experiences like immersive visual layouts and interactive tools and simulations, all generated completely on the fly based on your query." - Google

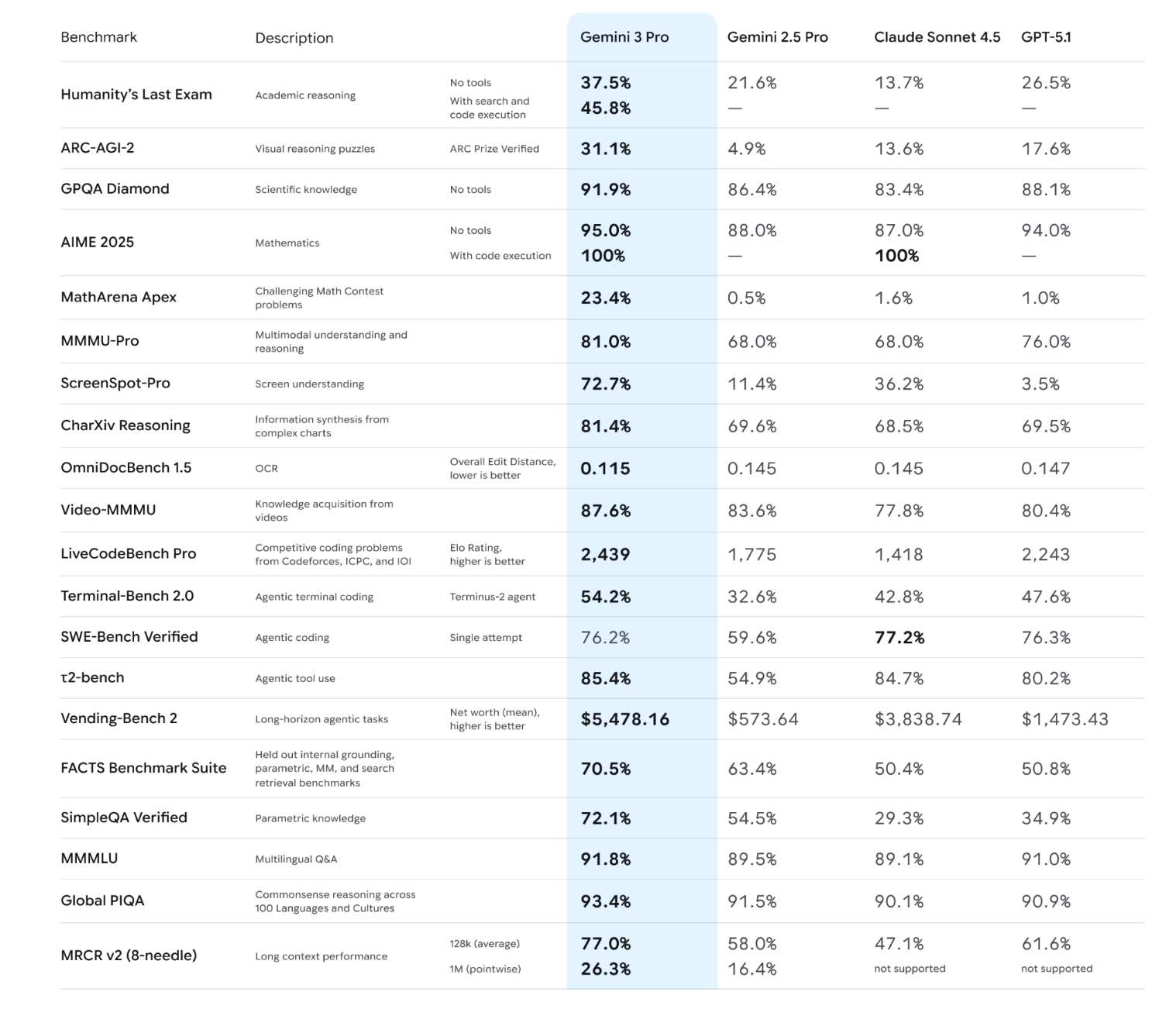

Google said Gemini 3 topped multiple leaderboards, and managed “an unprecedented 45.1% on ARC-AGI-2” (a challenging reasoning benchmark) “demonstrating its ability to solve novel challenges.”

Google also today released a platform called Google Antigravity that it wants to be “home base for software development in the era of agents.”

Gemini 3 performance

Gemini 3 tops the WebDev Arena leaderboard with an Elo score of 1487 (higher than GPT-5 and Claude Opus 4.1); scores 54.2% on Terminal-Bench 2.0, which tests a model's ability to use tools to operate a computer via a terminal; and “greatly outperforms 2.5 Pro” on the coding-agent benchmark SWE-bench.

A companion “Gemini 3 Deep Think” model is still undergoing safety testing; Google said it will be released within weeks.

Google is making Gemini 3 generally available (GA) today: for everyone in the Gemini app; for Google AI Pro and Ultra subscribers in AI Mode in Search; for developers in the Gemini API (via AI Studio), Google Antigravity and Gemini CLI; and for enterprises via its Vertex AI platform and Gemini Enterprise.

Hype check

Expect a lot of hype.

A swift way to let some air out of the balloon is to read Google’s own, more detailed Gemini 3 Frontier Safety Framework Report.

It shows that, for biology and chemistry, “responses across all domains showed generally high levels of scientific accuracy but low levels of novelty relative to what is already available on the web…”

And on the “instrumental reasoning level one” CCL (a Critical Capability Level, one of the risk thresholds defined in Google’s Frontier Safety Framework), Gemini 3 “does not consistently improve on Gemini 2.5 Pro's scores. In many cases, it achieves a lower score,” the frontier safety report notes.

Security

On security, Google said “we mitigate against prompt injection attacks with a layered defense strategy,” which it detailed in a June 2025 blog post. That strategy, it said today, “includes measures such as: prompt injection content classifiers, security through reinforcement, markdown sanitation and suspicious URL redaction, user confirmations, and end-user security mitigation notifications.”

Reaction to follow. Want to comment? Get in touch.
