NVIDIA is launching its own AI cloud service. The NVIDIA AI cloud offering will bring the full NVIDIA accelerated computing stack, from GPUs to systems to software, to customers. It will be hosted within the world’s hyperscale cloud providers but offered directly by NVIDIA and accessible via the browser, the chipmaker said this week.

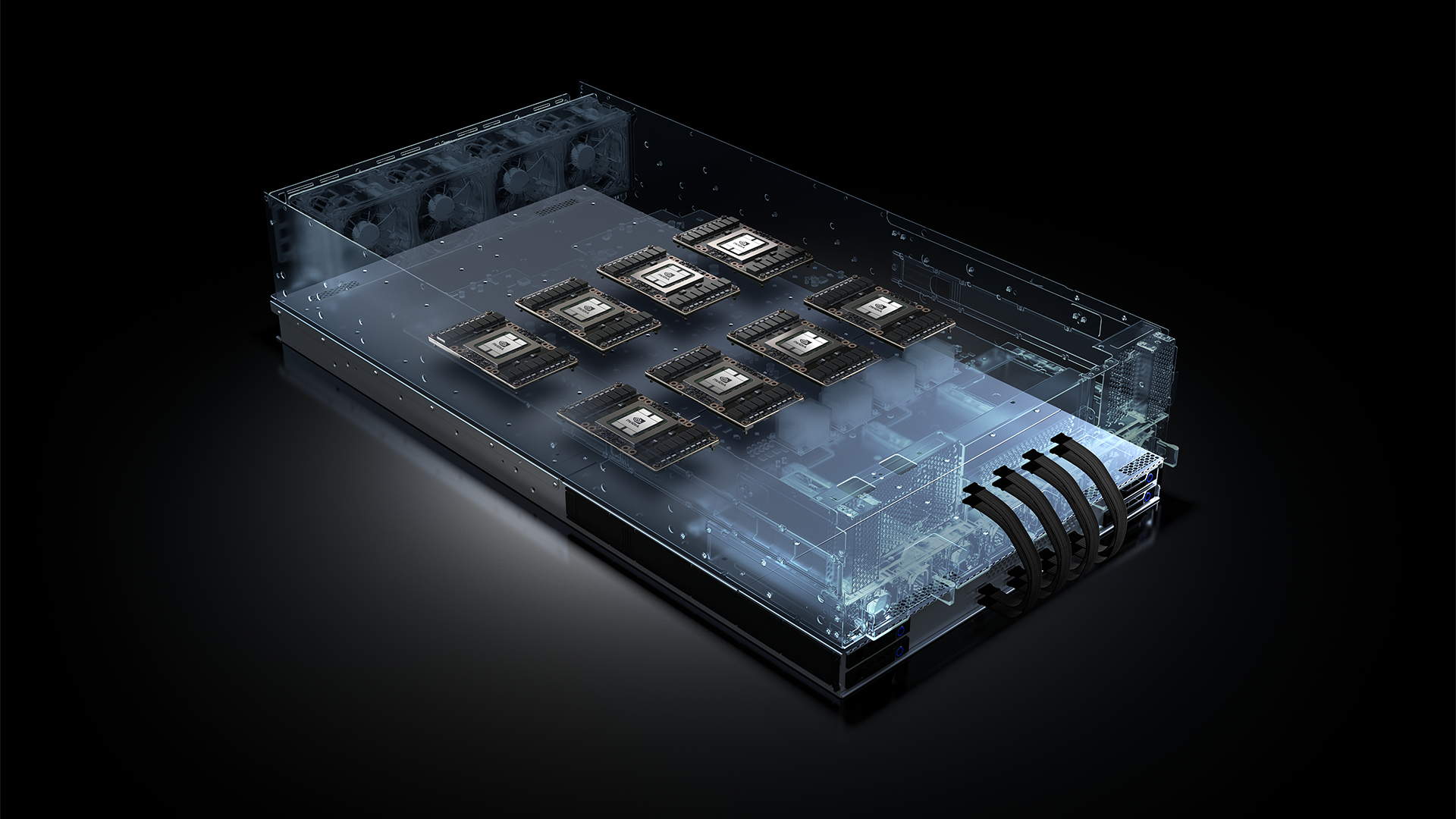

NVIDIA’s GPUs and associated software libraries have done much of the heavy lifting behind the scenes for popular AI models. All GPT-3 models, for example, were trained on NVIDIA V100 GPUs. (The company recently started shipping its DGX H100 “purpose-built AI infrastructure”, which comprises 8x H100 GPUs, 18x NVLink connections per GPU, 4x NVSwitches and 10x ConnectX network interfaces, bundled with Xeon CPUs and 30 terabytes of SSD storage.)

NVIDIA CEO Jensen Huang has taken to describing NVIDIA as a “multi-domain accelerated computing platform” and wants to capture surging demand for AI training and inference capabilities by offering a cloud-hosted AI-as-a-Service, saying: “Generative AI's versatility and capability has triggered a sense of urgency at enterprises around the world to develop and deploy AI strategies. Yet, the AI supercomputer infrastructure, model algorithms, data processing, and training techniques remain an insurmountable obstacle for most.”

NVIDIA AI Cloud: Infrastructure to OS to models in one place

CEO Jensen Huang told analysts on a February 22, 2023 earnings call: “[We work with] 10,000 AI start-ups around the world, with enterprises in every industry. And all of those relationships today would really love to be able to deploy both into the cloud at least or into the cloud and on-prem and oftentimes multi-cloud.

“By having NVIDIA’s infrastructure full stack in their cloud, we're effectively attracting customers to the CSPs… for the customers, they now have an instantaneous infrastructure… a team of people who are extremely good from the infrastructure to the acceleration software, the NVIDIA AI open operating system, all the way up to AI models, within one entity, they have access to expertise across that entire span. This is a great model for customers. It's a great model for CSPs. And it's a great model for us. It lets us really run like the wind.”

NVIDIA DGX Cloud builds on initial Oracle partnership

The offering builds on a partnership with Oracle Cloud Infrastructure that NVIDIA announced in October 2022.

NVIDIA is calling the offering the NVIDIA DGX Cloud and said in a release that “using their browser, [customers] will be able to engage an NVIDIA DGX AI supercomputer through the NVIDIA DGX Cloud, which is already offered on Oracle Cloud Infrastructure, with Microsoft Azure, Google Cloud Platform and others expected soon.”

The company added in the February 22 press release: “At the AI platform software layer, they will be able to access NVIDIA AI Enterprise for training and deploying large language models or other AI workloads. And at the AI-model-as-a-service layer, NVIDIA will offer its NeMo and BioNeMo customizable AI models to enterprise customers who want to build proprietary generative AI models and services for their businesses.”

Huang concluded: “New companies, new applications and new solutions to long-standing challenges are being invented at an astounding rate. Enterprises in just about every industry are activating to apply generative AI to reimagine their products and businesses. The level of activity around AI, which was already high, has accelerated significantly. This is the moment we've been working toward for over a decade. And we are ready.”

That's a lot of airtime The Stack has given NVIDIA here, and we understand that potential customers may have many questions, on everything from availability to cost to whether AWS is going to be part of this launch. We don't have answers yet, but we will: the company plans to reveal more details about the NVIDIA AI Cloud at its GTC developer conference, taking place virtually March 20-23, and we'll tackle this offering more robustly then.