Apple says that scanning photos in the cloud and on device for child abuse images could result in a “dystopian dragnet”, explaining in more detail why it killed off controversial plans for a “hybrid client-server approach.”

In August 2021 Apple announced plans to detect known child sexual abuse material (CSAM) by generating hashes of customers' photos and checking them, on their devices and in iCloud, against hashes of known abuse images.
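The basic idea of hash matching can be sketched as follows. This is a deliberately simplified illustration, not Apple's design: it uses exact SHA-256 digests from Python's standard `hashlib` module, whereas Apple's 2021 proposal relied on a perceptual hash it called NeuralHash, wrapped in cryptographic protocols so that individual matches were not revealed. The function names and the `threshold` parameter are hypothetical.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the hex SHA-256 digest of raw image bytes."""
    return hashlib.sha256(data).hexdigest()


def count_matches(photos: list[bytes], known: set[str]) -> int:
    """Count how many photos have a digest present in the known-hash set."""
    return sum(1 for photo in photos if digest(photo) in known)


def should_flag(photos: list[bytes], known: set[str], threshold: int) -> bool:
    """Flag a library only once matches reach a (hypothetical) threshold."""
    return count_matches(photos, known) >= threshold


# Usage: a hypothetical database containing one known digest.
known_hashes = {digest(b"known-image-bytes")}
library = [b"holiday photo", b"known-image-bytes", b"baby photo"]
print(count_matches(library, known_hashes))
print(should_flag(library, known_hashes, threshold=2))
```

Exact hashing breaks as soon as an image is resized or recompressed, because any change to the bytes changes the digest. That is why real scanning systems use perceptual hashes, which tolerate such edits but can also match images that merely look similar.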

As concern about the privacy implications of this kind of mass photo scanning mounted, Apple paused the plans, to the frustration of child safety campaigners and the relief of privacy and digital rights activists.

As first reported by Wired, Erik Neuenschwander, Apple’s director of user privacy and child safety, has now written to Heat Initiative, a new anti-CSAM campaign group, setting out why Apple chose not to proceed with such scanning.

"A slippery slope of unintended consequences..."

His letter comes as governments, including the UK’s, ramp up pressure on technology companies to build backdoors into their encryption.

For example, the UK’s Online Safety Bill demands that a “regulated user-to-user service must operate the service using systems and processes which secure (so far as possible) that the provider reports all detected and unreported UK-linked CSEA [Child Sexual Exploitation and Abuse] content present on the service to the NCA [National Crime Agency].”

The bill is in its final stages in Parliament and, if enacted in its current form, could see numerous secure messaging services quit the UK.

Apple photo scanning: "Imperiling..."

In the most detailed public explanation of the company's position so far, Neuenschwander said that after extensive consultation Apple had judged its original photo-scanning approach too risky for users.

“After having consulted extensively with child safety advocates, human rights organizations, privacy and security technologists, and academics, and having considered scanning technology from virtually every angle, we concluded it was not practically possible to implement without ultimately imperiling the security and privacy of our users,” Neuenschwander wrote.

“... scanning every user’s privately stored iCloud content would in our estimation pose serious unintended consequences for our users… Scanning every user’s privately stored iCloud data would create new threat vectors for data thieves to find and exploit. It would also inject the potential for a slippery slope of unintended consequences.

“Scanning for one type of content, for instance, opens the door for bulk surveillance and could create a desire to search other encrypted messaging systems across content types (such as images, videos, text, or audio) and content categories. How can users be assured that a tool for one type of surveillance has not been reconfigured to surveil for other content such as political activity or religious persecution? Tools of mass surveillance have widespread negative implications for freedom of speech and, by extension, democracy as a whole," wrote Apple's privacy chief.

“Also, designing this technology for one government could require applications for other countries across new data types. Scanning systems are also not foolproof and there is documented evidence from other platforms that innocent parties have been swept into dystopian dragnets that have made them victims when they have done nothing more than share perfectly normal and appropriate pictures of their babies,” he concluded.

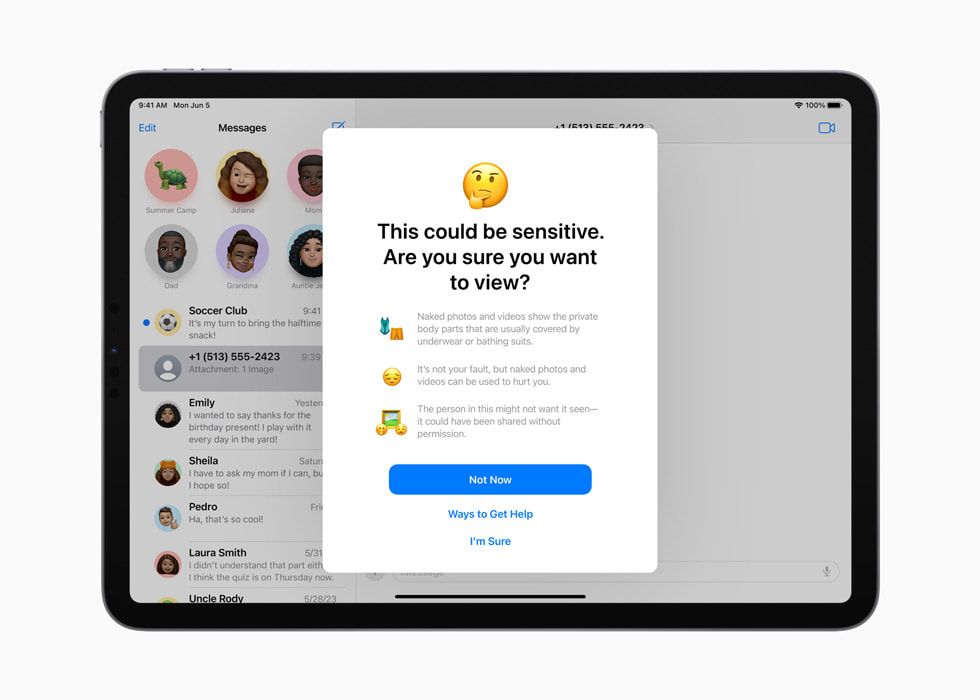

Instead, Apple is relying on its “Communication Safety” tools, which warn children when they receive or send photos containing nudity in Messages. Updates announced this summer extend the feature to video as well as still images, and a new API lets developers integrate Communication Safety into their own apps. All processing, Apple says, happens on device, “meaning neither Apple nor any third party gets access to the content.”

When the framework flags user-provided media as sensitive, the API lets developers intervene by “adjusting the user interface in a way that explains the situation and gives the user options to proceed [and] avoid displaying flagged content until the user makes a decision.”
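The intervention pattern quoted above can be illustrated without any Apple API. This minimal Python sketch assumes the sensitivity verdict is already available as a boolean; in a real app it would come from Apple's Swift SensitiveContentAnalysis framework, which is not modelled here. `Media`, `Decision` and `render` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    """The user's choice about a flagged item (hypothetical model)."""
    UNDECIDED = "undecided"
    SHOW = "show"
    DECLINE = "decline"


@dataclass
class Media:
    name: str
    flagged: bool  # assumed: result of an on-device sensitivity check


def render(media: Media, decision: Decision = Decision.UNDECIDED) -> str:
    """Return what the UI should display for one media item."""
    if not media.flagged or decision is Decision.SHOW:
        return f"display {media.name}"
    if decision is Decision.DECLINE:
        return "hidden: user chose not to view"
    # Flagged and no decision yet: explain and offer options, never show the content.
    return "warning: this may be sensitive [View anyway] [Don't view]"


# Usage: a flagged item stays hidden until the user explicitly opts in.
photo = Media("photo.jpg", flagged=True)
print(render(photo))
print(render(photo, Decision.SHOW))
```

Defaulting to the warning state means that a missing or delayed user decision never results in flagged content being shown.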