There are over 100 million Intel Xeon chips installed in the market. Small wonder, then, that Intel described its much-delayed “Sapphire Rapids” generation of processors, which introduces a brand-new microarchitecture to the data centre, as “one of the most important product launches in company history”. It unveiled nearly 50 new CPUs, as well as a new data centre GPU line code-named Ponte Vecchio, on Tuesday, January 10.

The new 4th Gen Intel Xeon chips deliver 55% and 52% lower total cost of ownership (TCO) for AI and database workloads respectively, versus 3rd Gen Intel Xeon Scalable 8380 processors, the company claimed, citing more efficient CPU utilisation, lower electricity consumption and a higher return on investment (ROI). (You can read the small print on precise processor performance claims and benchmarks for the new line here.)

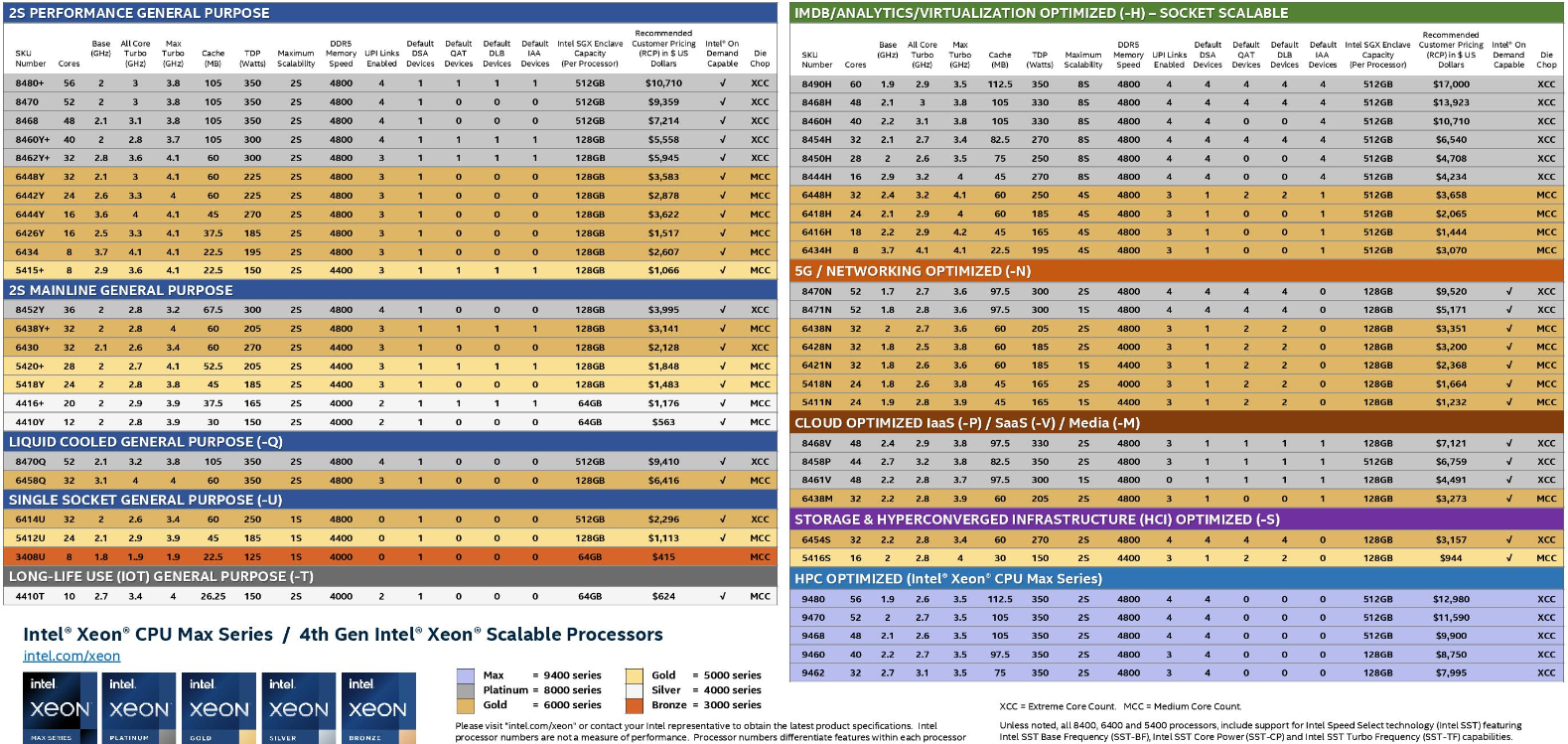

The Sapphire Rapids line features “nearly 50 targeted SKUs [from] mainstream general-purpose SKUs to purpose-built SKUs for cloud, database and analytics, networking, storage, and single-socket edge use cases”, said Intel on January 10. (An SKU, or stock-keeping unit, is essentially a distinct product variant; in other words, customers can choose from nearly 50 different new chips.)

These include the Intel Max series, which features up to 56 performance cores and 64 GB of in-package high-bandwidth memory, as well as PCI Express 5.0 and DDR5 memory support, and is architected to "unlock performance and speed discoveries in data-intensive workloads". Intel claims the Max series provides up to 3.7 times better performance on a range of real-world applications such as energy and earth systems modelling.

Intel 4th Gen Xeon accelerators

The 4th Gen Xeon chips feature a wide range of built-in accelerators.

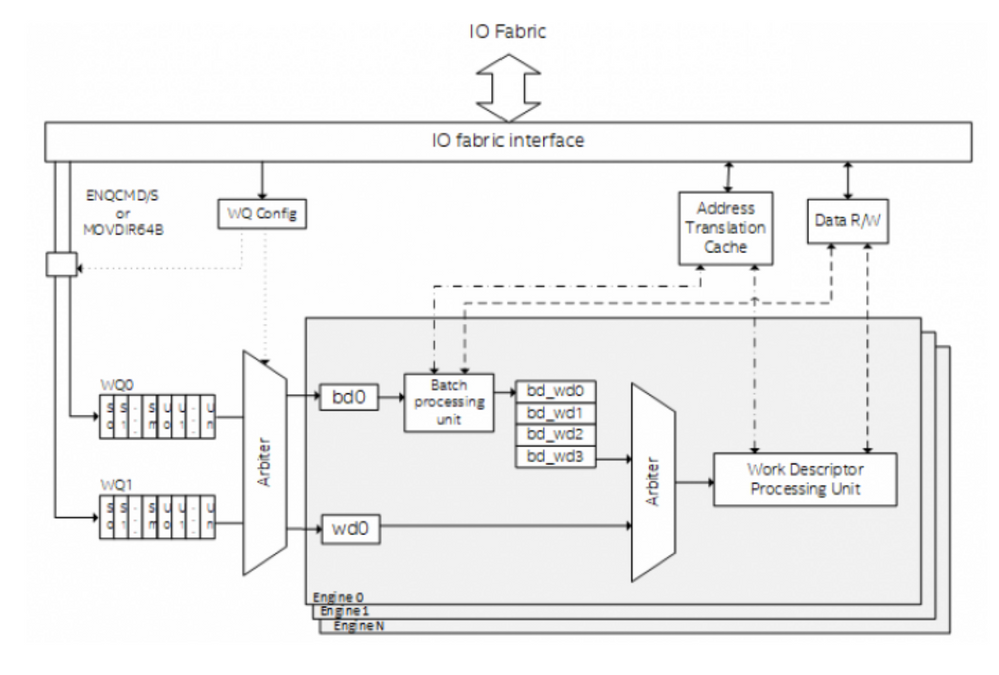

These give customers the ability to use software to dynamically tune performance for different workloads. For example, the Data Streaming Accelerator, or "DSA" (illustrated below), is designed to offload common data movement and data transformation tasks that cause overhead in data centre-scale deployments.

DSA can "move and process data across different memory tiers (e.g., DRAM or CXL memory), CPU caches and peer devices (e.g., storage and network devices or devices connected via non-transparent bridge (NTB))," Intel said.

The Data Direct I/O Technology, or "DDIO", accelerator, meanwhile, lets users "eliminate frequent visits to main memory [to] help reduce power consumption, provide greater I/O bandwidth scalability and reduce latency."

The Dynamic Load Balancer, or "DLB", accelerator "enables the efficient distribution of network processing across multiple CPU cores/threads and dynamically distributes network data across multiple CPU cores for processing as the system load varies", Intel added in a product sheet. (You can see the full list of accelerators here.)
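To make the idea of dynamic load balancing a little more concrete, here is a minimal software sketch in Python. It is an analogy under stated assumptions, not Intel's DLB interface: DLB does this scheduling in hardware, and the function name `distribute` and the per-packet "cost" units are hypothetical. The sketch sends each incoming packet to whichever core currently has the least queued work, so the load evens out as traffic varies.

```python
import heapq

# Hypothetical illustration only: a software analogy for dynamic load
# balancing across CPU cores. This is NOT Intel's DLB API; DLB performs
# this kind of scheduling in hardware.

def distribute(packet_costs, num_cores):
    """Assign each packet to the currently least-loaded core.

    packet_costs: per-packet processing costs (arbitrary, assumed units).
    Returns (assignment, loads): assignment[i] is the core chosen for
    packet i, and loads[c] is the total work assigned to core c.
    """
    # Min-heap of (current_load, core_id): the least-loaded core is on top,
    # with ties broken by the lower core id.
    heap = [(0, core) for core in range(num_cores)]
    heapq.heapify(heap)

    assignment = []
    for cost in packet_costs:
        load, core = heapq.heappop(heap)          # least-loaded core
        assignment.append(core)
        heapq.heappush(heap, (load + cost, core))  # record its new load

    # Convert the heap back into a per-core load list.
    loads = [0] * num_cores
    for load, core in heap:
        loads[core] = load
    return assignment, loads


if __name__ == "__main__":
    costs = [5, 1, 1, 1, 1, 1, 3, 2]
    assignment, loads = distribute(costs, num_cores=3)
    print("core per packet:", assignment)
    print("work per core:", loads)
```

With the sample costs above, each of the three cores ends up with five units of work. A hardware balancer such as DLB also has to deal with concerns like packet ordering and queue priorities, which this greedy sketch ignores.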

Intel emphasised that the series is "unified by oneAPI for a common, open, standards-based programming model that unleashes productivity and performance" (don't forget to patch that oneAPI escalation-of-privilege (EoP) vulnerability...).

Intel largely avoided direct comparisons with AMD chips, but has made some of its new processors available to the press for unfettered testing: outlets like Tom’s Hardware are benchmarking them and will publish detailed reviews in the coming days. Notably, Intel also emphasised, the 4th Gen Intel Xeon series is Intel On Demand-ready.

Enter Intel On Demand: A new pay-as-you-go model

What is Intel On Demand? In short, a way of remotely switching capabilities and special-purpose accelerators on or off; a move that will let the semiconductor firm make more money from software licensing.

Whilst cynics may see this as an unnerving way of holding customers over a barrel before they can properly unleash their hardware, Intel says it is all in aid of a shift from capex to opex that will “give end customers the flexibility to choose fully featured premium SKUs or the opportunity to add features at any time throughout the lifecycle of the Xeon processor”, adding that this flexibility will “become more valuable to customers as the number of workloads and the amount of third-party enterprise software using accelerators grows.”


Supported Intel On Demand features include Intel’s Dynamic Load Balancer, Data Streaming Accelerator, In-Memory Analytics Accelerator, QuickAssist Technology and Software Guard Extensions, the company said.

Put simply, the new Intel pay-as-you-go model will let customers hit “boost” on regions of the chip to improve performance in a chosen area such as compression, encryption, data movement or data analytics.

There are clear reasons why customers may want to do this in future. The chips' built-in Advanced Matrix Extensions (AMX) accelerators, for example, are claimed to offer up to 10x higher PyTorch real-time inference and training performance: fantastic stuff, but not something you might want running full-throttle and sucking up electricity all of the time...

The initial partners supporting Intel On Demand are H3C, Inspur, Lenovo, Supermicro and Variscale.

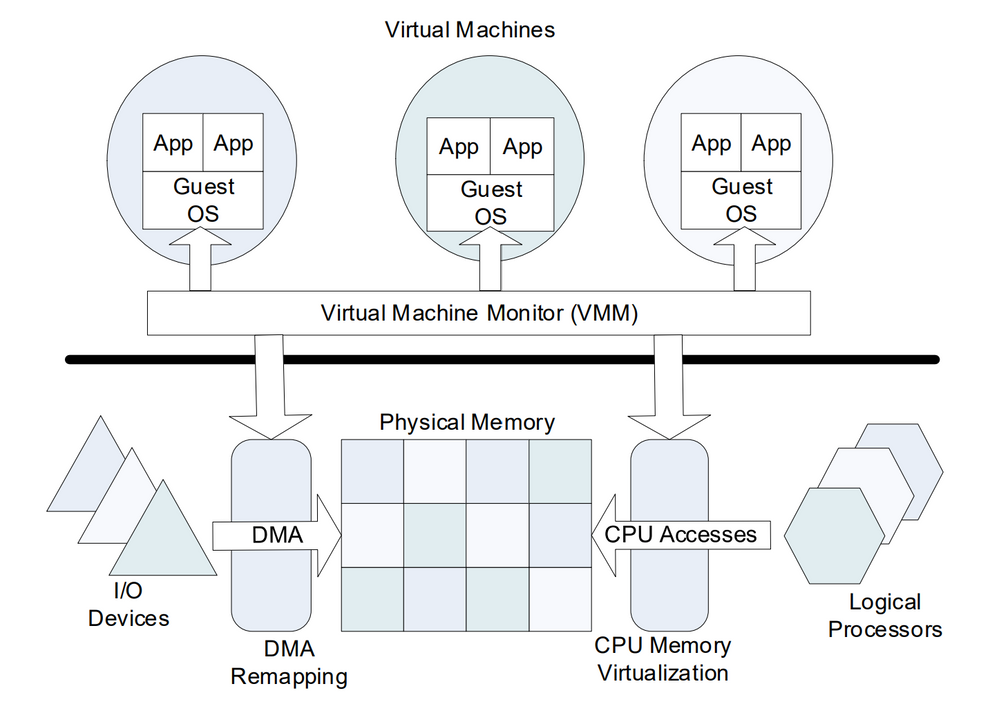

Those wanting to get into the weeds on some of the other innovations in the 4th Gen Xeon line could do worse than read Intel Labs' contributions. The team emphasises work done on virtualisation technology, including new address translation services (ATS), process address space ID (PASID) and Scalable I/O Virtualization (S-IOV) support, which allow CPUs and XPUs or accelerators to collaborate more efficiently. It also highlights something called "Nested-IOVA", which enables isolation within a VM "as opposed to the inter-VM isolation previously offered", and an "on-the-fly page-table switch" for kernel soft reboot (KSR) usage, which allows software to provide a new set of page tables while the input-output memory management unit (IOMMU) is actively performing address translation.

Just want to go shopping? Here's the partner news:

- Lanner Introduces the Next-gen Network Security Appliances and Compute Blades powered by 4th Gen Intel Xeon Scalable Processor

- iSIZE BitClear Achieves AI-Driven Live Video Denoising and Upscaling up to 4K with Intel Advanced Matrix Extensions

- Lenovo Unveils Next Generation of Intel-Based Smart Infrastructure Solutions to Accelerate IT Modernization

- Azure Confidential Computing on 4th Gen Intel Xeon Scalable Processors with Intel TDX

- Cisco Unveils Its Most Powerful and Energy-Efficient Computing Systems

- ASUS Expands Data Center and Liquid Cooling Server Solutions With 4th Gen Intel Xeon Scalable Processors

- Supermicro Unleashes New Better, Faster, and Greener X13 Server Portfolio Featuring 4th Gen Intel Xeon Scalable Processors

- The Greenest Generation: NVIDIA, Intel and Partners Supercharge AI Computing Efficiency

- Giga Computing Announces Its GIGABYTE Server Portfolio for the 4th Gen Intel Xeon Scalable Processor

- TYAN Refines Server Performance with 4th Gen Intel Xeon Scalable Processors