Intel is delaying production of its "highest performance data center processor" to date. Dubbed "Sapphire Rapids", the high-powered Xeon server chip line will hit production in the first quarter of 2022, rather than by the end of this year as promised, Intel announced June 29, sending shares down and rival AMD's up.

The announcement comes just eight weeks after CEO Pat Gelsinger said the new chip was "scheduled to reach production around the end of this year" in Intel's Q1 (April 30) earnings call.

Corporate VP Lisa Spelman said in a blog: "Given the breadth of enhancements in Sapphire Rapids, we are incorporating additional validation time prior to the production release, which will streamline the deployment process for our customers and partners", attributing the delay to plans to add major new features to the chip.

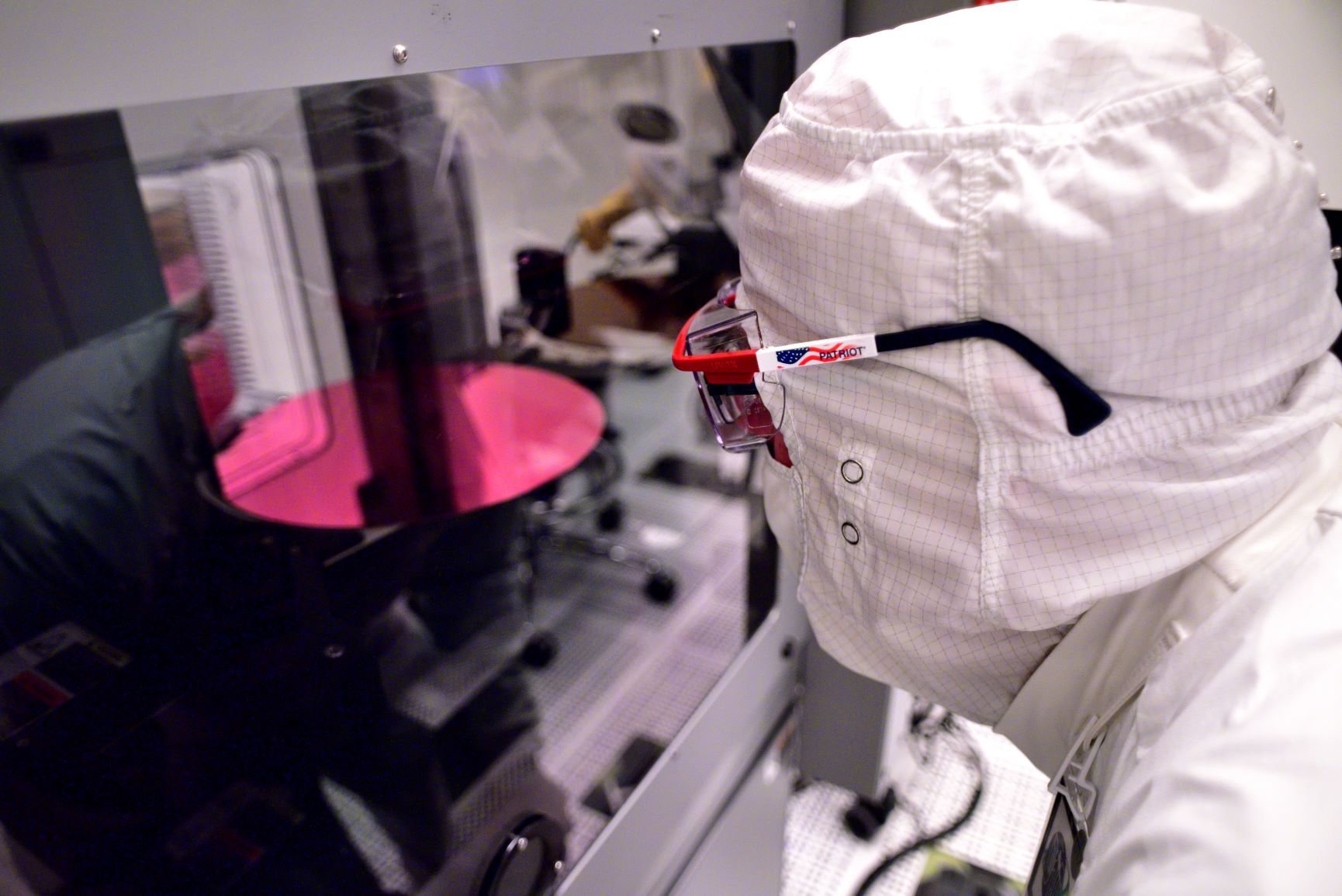

"Additional validation time" sounds a lot like "it doesn't work properly yet and we need to fix that", but more generous critics say the chip represents a major shift for Intel -- from a single die to a tiled architecture -- and features some significant design changes, including a PCI Express 5.0 interface, DDR5 memory, and Compute Express Link (CXL) 1.1 support. Better, they say, that these are all working smoothly.

Sapphire Rapids delays: Had CEO hinted at hiccups?

Gelsinger in April had described the new CXL capabilities as "most seminally" important, saying "that additional interface on the platform has gotten tremendous support from the industry, new capabilities, co-processor capabilities, memory pooling capabilities. It really has been maybe the most exciting new platform capability since the PCI gen when we introduced that quite a number of years ago."

Hinting at complications [our emphasis], he added at the time: "Particularly for cloud customers, who have seen their memory portion of their TCO costs rise quite rapidly, there's a lot of enthusiasm in that area of the platform. So I'd say, overall, yes, we would have probably liked to have less platform transitions than this has induced, but so far, the response from customers is they're going through those transitions with us."

Gelsinger added on the Q1 earnings call: "As we look further out in time, having more platform stability in the architecture, is something we're striving for. And I think as we lay out the '23, '24, '25 road map directions to the industry, you'll start to see that theme much more seminally, right, centered in the road map. Also, I think as we get all aspects of our core leadership, our vector AI leadership, process leadership, all of those start to come into play, I think we'll see a very nice view of how that platform architecture lays out over time."

The Intel Sapphire Rapids delay comes a week after CEO Pat Gelsinger announced an aggressive executive shakeup.

Navin Shenoy, GM of the data platforms group, is leaving; Sandra Rivera was appointed EVP/GM of datacenter and AI; and Nick McKeown was appointed SVP/GM of a new network and edge group. Gelsinger also set up two new business units, one focused on software and one on HPC and graphics, to be led by Greg Lavender and Raja Koduri respectively.

Sapphire Rapids delay

In her blog, Intel's Lisa Spelman pointed to the need to make sure major new features are working seamlessly from the word go.

Among them, "a set of matrix multiplication instructions that will significantly advance DL inference and training", she said in the June 29 blog post, adding: "I’ve seen this technology firsthand in our labs and I can't wait to get it in our customers’ hands. I don’t want to give away everything now, but I can tell you that on early silicon we are easily achieving over two times the deep learning inference and training performance compared with our current Xeon Scalable generation."

Spelman added: "The other major acceleration engine we’re building into Sapphire Rapids is the Intel Data Streaming Accelerator. It was developed hands-on with our partners and customers who are constantly looking for ways to free up processor cores to achieve higher overall performance. DSA is a high-performance engine targeted for optimizing streaming data movement and transformation operations common in high-performance storage, networking and data processing-intensive applications. We’re actively working to build an ecosystem around this new feature so customers can easily take advantage of its value."

The Intel Sapphire Rapids delay comes a day after Intel confirmed the chips would ship with integrated High Bandwidth Memory (HBM) for memory bandwidth-sensitive workloads. HBM essentially stacks DRAM dies on top of each other to boost memory bandwidth, which remains a critical bottleneck on the performance of computing systems.