Artificial Intelligence will need to come out of the cloud and move to enterprises' own infrastructure or the edge, NVIDIA CEO Jensen Huang emphasised on an earnings call late Wednesday, on the grounds that "the vast majority of the world has... data sovereignty issues or data rate issues that can't move to the cloud easily."

Huang was speaking after chipmaker NVIDIA's data center revenues topped $2 billion for the first time ever, rising 79% year-on-year as the hyperscalers snapped up GPUs for their data centres to power the deep neural networks underpinning increasingly popular Artificial Intelligence services in the cloud.

Net income for what NVIDIA reports as Q1 2022 meanwhile climbed 31% to $1.9 billion on overall quarterly revenues of $5.66 billion, another record. The results come after NVIDIA hosted its largest-ever GPU Technology Conference: its opening keynote drew 14 million views, with over 200,000 registrations from 195 countries.

AI at the edge...

Pointing to NVIDIA's EGX enterprise AI platform (which can run on VMware vSphere and which is also compatible with Red Hat OpenShift), Huang said: "We're democratising AI, we'll bring it out of the cloud, we'll bring it to enterprises and we'll bring it out to the edge... [AI] has to be integrated into classical enterprise systems."

In a typically loquacious earnings call, Huang also waxed lyrical about the opportunities for NVIDIA's BlueField, the programmable Smart Network Interface Controller (NIC) technology it gained via the acquisition of Mellanox.

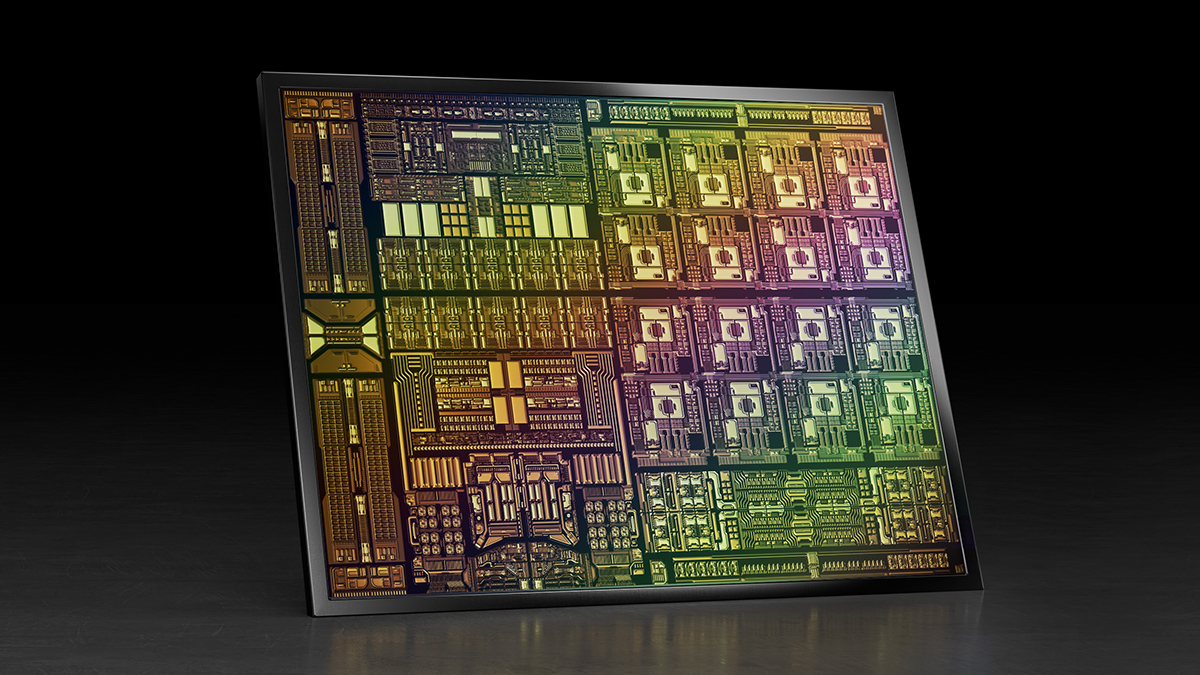

BlueField, which NVIDIA refers to as a data processing unit (DPU), is hardware that offloads host server CPU workloads by taking on tasks like interfacing to storage devices, security checks, and network data transmission, letting server CPUs focus on compute and applications rather than security or networking functions. Dubbing it a "data centre-on-a-chip", Huang said: "Every single networking chip in the world will be a smart networking chip. It will be a programmable, accelerated infrastructure processor. I believe every single server node will have it... and it will offload about half of the software processing that's consuming data centers today."

He added: "You don't want a whole bunch of bespoke custom gear inside a data center. You want to operate the data center with software, you want it to be software-defined. The software-defined data center movement enabled this one pane of glass -- a few IT managers orchestrating millions and millions of nodes of computers at one place. The software runs what used to be storage, networking, security, virtualisation, and all of that; all of those things have become a lot larger and a lot more intensive and it's consuming a lot of the data center...

"If you assume that it's a zero-trust data center, probably half of the CPU cores inside the data center are not running applications. That's kind of strange because you created the data center to run services and applications, which is the only thing that makes money... The other half of the computing is completely soaked up running the software-defined data center, just to provide for those applications. [That] commingles the infrastructure, the security plane and the application plane and exposes the data center to attackers. So you fundamentally want to change the architecture as a result of that; to offload that software-defined virtualisation and the infrastructure operating system, and the security services to accelerate it... to take that application and software and accelerate it using a form of accelerated computing. These things are fundamentally what BlueField is all about. BlueField-3 replaces approximately 300 CPU cores, to give you a sense of it."

"We continue to gain traction in inference with hyperscale and vertical industry customers across a broadening portfolio of GPUs," CFO Colette Kress said on the earnings call late Wednesday (May 26), adding: "We had record shipments of GPUs used for inference. Inference growth is driving not just the T4, which was up strongly in the quarter, but also the universal A100 Tensor Core GPU as well as the new Ampere architecture-based A10 and A30 GPUs, all excellent at training as well as inferencing."

The company's heavily contested $40 billion proposed acquisition of chipmaker Arm is on track, she claimed, saying "we are making steady progress in working with the regulators across key regions. We remain on track to close the transaction within our original timeframe of early 2022."

NVIDIA has also made pre-trained models available on the NVIDIA GPU Cloud registry, meaning developers can choose a pre-trained model and adapt it to fit their specific needs using NVIDIA TAO, the company's transfer learning software. This fine-tunes the model with the customer's own small data sets, adapting it to their data "without the cost, time and massive data sets required to train a neural network from scratch." Once a model is optimized and ready for deployment, users can integrate it with an NVIDIA application framework that fits their use case.
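
For readers unfamiliar with transfer learning, the general idea can be shown in a few lines of plain Python. This is a toy sketch only and does not use or represent NVIDIA TAO's actual interface: the "pre-trained" layer, the function names (`extract_features`, `fine_tune`, `predict`) and the four-sample data set are all hypothetical, made up for illustration. The point it shows is that the pre-trained weights stay frozen while only a small new output layer is trained on the customer's data.

```python
import math

# Hypothetical stand-in for a pre-trained backbone. Its weights are fixed
# ("frozen") and are never updated during fine-tuning.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Apply the frozen layer followed by a tanh non-linearity."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)))
            for row in FROZEN_WEIGHTS]


def predict(head, x):
    """Probability of class 1 from the small trainable logistic head."""
    feats = extract_features(x)
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], feats))
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune(samples, epochs=500, lr=0.5):
    """Train only the head on a small labelled data set (gradient descent)."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for x, y in samples:
            feats = extract_features(x)
            error = predict(head, x) - y  # gradient of the log loss w.r.t. z
            head["weights"] = [w - lr * error * f
                               for w, f in zip(head["weights"], feats)]
            head["bias"] -= lr * error
    return head


# A tiny, made-up "customer" data set: the label is 1 when x[0] > x[1].
small_dataset = [
    ([2.0, 0.0], 1),
    ([1.5, 0.5], 1),
    ([0.0, 2.0], 0),
    ([0.5, 1.5], 0),
]

head = fine_tune(small_dataset)
print(predict(head, [3.0, 1.0]) > 0.5)  # unseen input from class 1
```

Because only the three parameters in the head are learned, four labelled samples are enough here. Real fine-tuning typically updates far more parameters, and often some of the backbone too, but follows the same pattern.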

As an example, the company highlighted its "Jarvis framework" for interactive conversational AI, which is now generally available (GA) and being used by customers such as T-Mobile and Snap.