Countries around the world are scrambling to invest in sovereign AI infrastructure, said NVIDIA CEO Jensen Huang – as news broke this week that OpenAI researchers were working on a project called Q* that could presage a sharp improvement in the ability of AI to solve mathematical and scientific problems, capabilities that, if owned by a nation state, could confer a huge competitive advantage on the global chessboard.

“Many countries are awakening to the need to invest in sovereign AI infrastructure to support economic growth and industrial innovation… nations can use their own data to train LLMs and support their local generative AI ecosystems,” Huang told analysts on a Q3 earnings call – speaking after a European entity announced plans to build the world’s most powerful AI system, powered by nearly 24,000 of NVIDIA’s chips.

The supercomputer, JUPITER, based in Germany, is owned by the “EuroHPC Joint Undertaking”, a public-private partnership backed with €7 billion in European member state funding, and will focus on “material science, drug discovery, industrial engineering and quantum computing.”

It will feature nearly 24,000 Grace Hopper “superchips” from NVIDIA – each of which has an astonishing 35,000 parts. As Huang noted on the call: “Even its passive components are incredible: high voltage parts, high frequency parts, high current parts; even the manufacturing of it is complicated, the testing of it is complicated, the shipping of it [is] complicated and installation is complicated.”

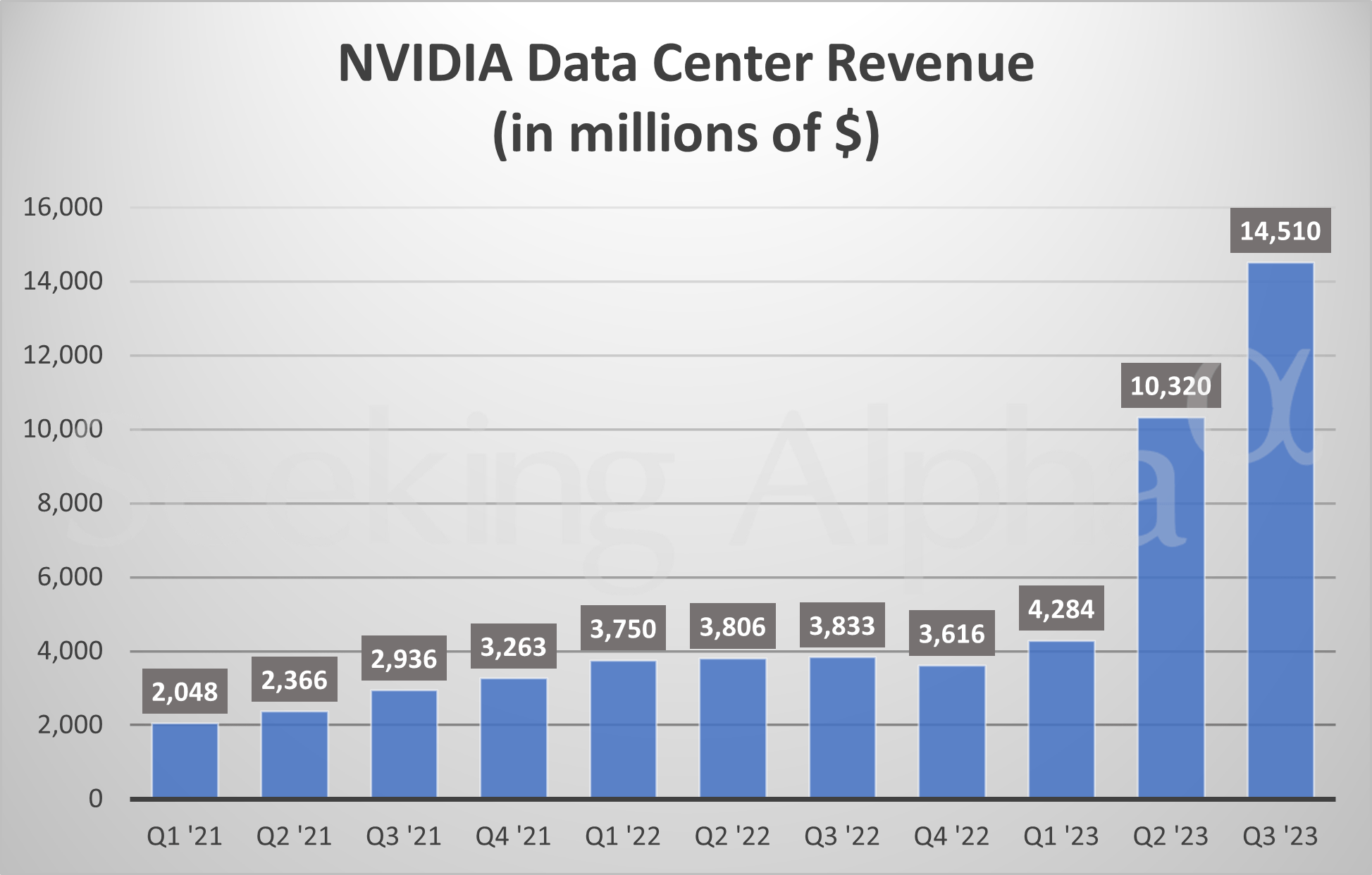

NVIDIA’s CEO was speaking as the company (which has been the single biggest beneficiary of demand for hardware to power AI capabilities) reported a 279% increase in data centre sales year-on-year for Q3 – with data centre revenues hitting a record $14.51 billion and the firm’s gross profit climbing from $3 billion for Q3 2022 to $13 billion this quarter.

Referring to the sovereign AI race and pointing to Europe's JUPITER, a new Bristol-based supercomputer in the UK and beyond, Huang said: “We are working with India's government… to boost their sovereign AI infrastructure. French private cloud provider, Scaleway, is building a regional AI cloud… to fuel advancement across France and Europe.”

He added: “National investment in compute capacity is a new economic imperative and serving the sovereign AI infrastructure market represents a multi-billion dollar opportunity over the next few years... the number of sovereign AI clouds that are being built is really quite significant.”

“All-in, we estimate that the combined AI compute capacity of all the supercomputers built on Grace Hopper across the U.S., Europe and Japan next year will exceed 200 exaflops with more wins to come,” Huang said.

The private sector, of course, is already tearing ahead and NVIDIA is not just benefiting from GPU sales: its networking business now has a $10 billion annualised revenue run rate, with InfiniBand sales up fivefold year-on-year. (Azure alone uses over 29,000 miles of InfiniBand cabling, enough to circle the globe.)

Huang emphasised that InfiniBand is “not just the network, it’s also a computing fabric. We’ve put a lot of software-defined capabilities into the fabric including computation. We will do floating-point calculations and computation right on the switch, and right in the fabric itself…”

In a typically detail-rich Q&A (NVIDIA’s CEO gives good conference call) he also gave fresh detail on a newly announced “AI Foundry” model.

The company had announced that enterprise service on November 15, describing it as a “collection of NVIDIA AI Foundation Models, NVIDIA NeMo™ framework and tools, and NVIDIA DGX™ Cloud AI supercomputing services… for creating custom generative AI models.”

That service is currently only available on Microsoft Azure, and customers will need to be comfortable with that because, as Huang himself pointed out this week, companies “want their own proprietary AI. They can’t afford to outsource their intelligence and hand out their data, and hand out their flywheel for other companies to build the AI for them.”

How does that work, exactly, beyond the generic details the press release shared? Huang was happy to hold forth: “Our monetization model is that with each one of our partners they rent a sandbox on DGX Cloud, where we work together, they bring their data, they bring their domain expertise, we bring our researchers and engineers, we help them build their custom AI. We help them make that custom AI incredible. Then that custom AI becomes theirs. And they deploy it on the runtime that is enterprise-grade, enterprise-optimized or outperformance-optimized, runs across everything NVIDIA. We have a giant installed base in the cloud, on-prem, anywhere. It is constantly patched and optimized and supported…

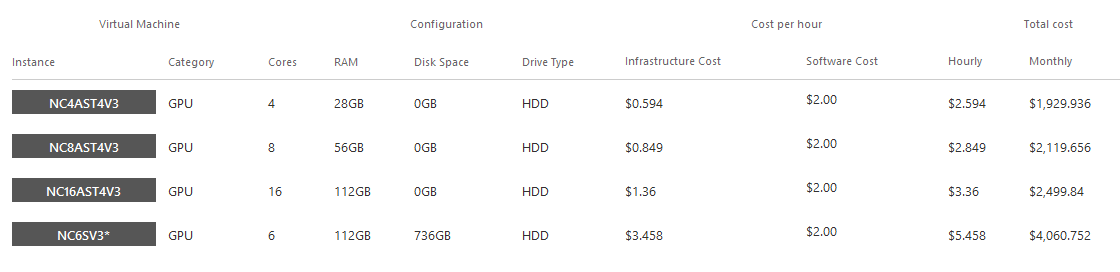

"[This] ‘NVIDIA AI Enterprise’ [offering] is $4,500 per GPU per year… Our business model is basically a license. Our customers with that basic license can build their monetization model on top of it…

“In a lot of ways we’re wholesale, they become retail.”
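For a rough sense of scale, the per-GPU licence figure Huang quoted can be turned into a back-of-the-envelope annual bill. The sketch below is illustrative only: the fleet sizes are hypothetical, the function name is invented for this example, and it assumes a flat $4,500 per GPU per year with no volume discounts, hardware costs or DGX Cloud rental fees included.

```python
# Illustrative arithmetic only: assumes a flat list price per GPU per year,
# as quoted on the call, with no discounts or other costs.
LICENSE_USD_PER_GPU_PER_YEAR = 4_500


def annual_license_cost(gpu_count: int,
                        price_per_gpu: int = LICENSE_USD_PER_GPU_PER_YEAR) -> int:
    """Return the yearly licence bill in US dollars for a GPU fleet."""
    if gpu_count < 0:
        raise ValueError("gpu_count must be non-negative")
    return gpu_count * price_per_gpu


if __name__ == "__main__":
    # Hypothetical fleet sizes, chosen purely for illustration.
    for fleet in (8, 1_000, 10_000):
        print(f"{fleet:>6} GPUs: ${annual_license_cost(fleet):,} per year")
```

On these assumptions, a hypothetical 1,000-GPU deployment would carry a licence bill of $4.5 million a year before any revenue the customer builds on top of it.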