AMD has merged multiple AI teams into a single organisation under former Xilinx CEO Victor Peng as it continues to attack a burgeoning AI market across not just silicon but software, from data centre to edge.

The new AMD AI group has “responsibility for owning our end-to-end AI hardware strategy and driving development of a complete software ecosystem, including optimized libraries, models and frameworks spanning our full product portfolio,” AMD CEO Dr Lisa Su confirmed on an earnings call late on May 2, 2023.

“We are very excited about our opportunity in AI. This is our number one strategic priority, and we are engaging deeply across our customer set to bring joint solutions to the market… I look forward to sharing more about our AI progress… as we broaden our portfolio and grow the strategic part of our business,” Dr Su added.


AMD received a boost earlier this year with the release of the popular PyTorch 2.0 machine learning framework, which includes support for AMD Instinct and Radeon GPUs. The company also continues to work on its own AI software.

The opportunity spans the enterprise data centre, the cloud and the embedded edge, Dr Su said, but “the beauty of particularly the cloud opportunity is it's not that many customers, and it's not that many workloads…”

“We're working very, very closely with our customers on optimizing for a handful of [AI] workloads that generate significant volume. That gives us a very clear target for what winning is in the market,” she said.

Peng, a pioneer in the semiconductor space with several patents to his name, joined AMD when its $50 billion acquisition of Xilinx, announced in 2020, closed in 2022. Prior to his 14 years at FPGA specialist Xilinx, Peng worked at AMD, where he led the central silicon engineering team supporting graphics, game console products and CPU chipsets.

AMD AI focus comes ahead of MI300 launch

The shift comes a year after AMD announced a unified software strategy called Unified AI Stack 2.0.

This aimed to start pulling together AMD's expansive AI software: a stack for CPUs that includes optimized inference models, compilers, libraries and runtimes for Linux and Windows; its open source ROCm platform for heavy lifting of parallel applications across CPUs and GPUs; and Xilinx’s Vitis platform for FPGAs and other adaptive chips.

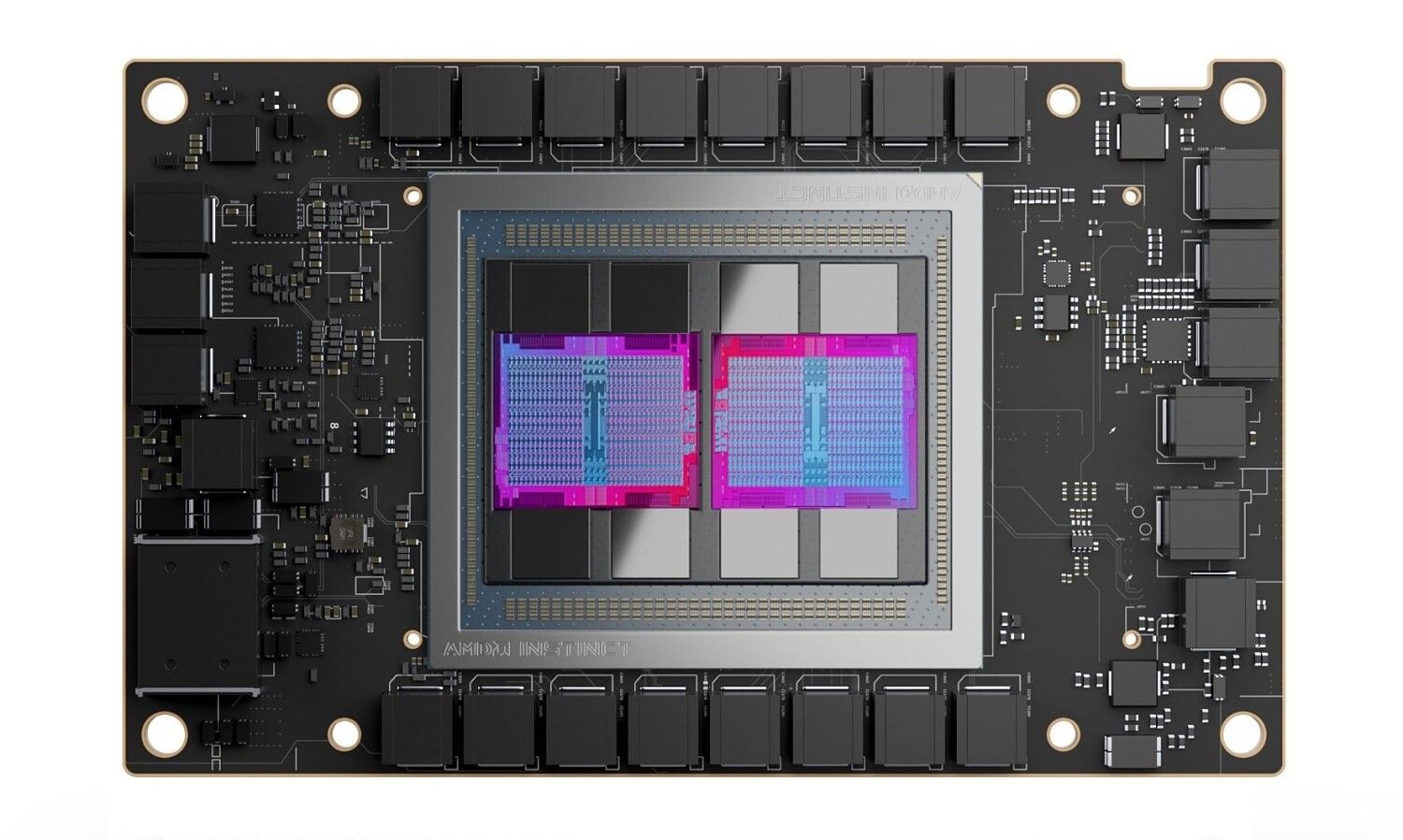

A key opportunity AMD sees in AI is for its MI300 data centre Accelerated Processing Unit (APU) that lands later this year. AMD first teased the MI300 (built on TSMC’s 5nm process) at its 2022 investor day, promising “greater than 8x increase in AI training performance compared to the AMD Instinct MI200 accelerator” and a “groundbreaking 3D chiplet design combining AMD CDNA 3 GPU, Zen 4 CPU, cache memory, and HBM chiplets [for] leadership memory bandwidth and application latency for AI training and HPC workloads.”

“MI300 is a game-changing design: the data center APU blends a total of 13 chiplets, many of them 3D-stacked, to create a chip with twenty-four Zen 4 CPU cores fused with a CDNA 3 graphics engine and 8 stacks of HBM3. Overall the chip weighs in with 146 billion transistors, making it the largest chip AMD has pressed into production,” as Tom's Hardware's Paul Alcorn noted after getting his hands on one at CES earlier this year.

“With the recent interest in generative AI, I would say the pipeline for MI300 has expanded considerably here over the last few months, and we're excited about that,” said AMD’s CEO on the Q1 earnings call.

“We've continued to do quite a bit of library optimization with MI250 and software optimization to really ensure that we could increase the overall performance and capabilities.

“MI300 looks really good,” she said.

“I think from everything that we see, the workloads have also changed a bit in terms of… whereas a year ago, much of the conversation was primarily focused on training. Today, that has migrated to sort of large language model inferencing, which is particularly good for GPUs. So I think from an MI300 standpoint, we do believe that we will start ramping revenue in the fourth quarter with cloud AI customers and then it will be more meaningful in 2024,” she added.

“We're putting a lot more resources [into this]… taking our Xilinx and the overall AMD AI efforts and collapsing them into one organization that's primarily to accelerate our AI software work as well as platform work. So success for MI300 is, for sure, a significant part of sort of the growth in AI in the cloud,” Dr Su added.

In its first-quarter results, AMD reported revenue of $5.3 billion, down 9% year on year, and a net loss of $139 million, largely due to acquisition-related costs. Non-GAAP net income was $970 million.