LLMs

Communicating RAG/ML is hard. At a growing number of companies, engineering, legal and communications need to sit down and thrash this out.

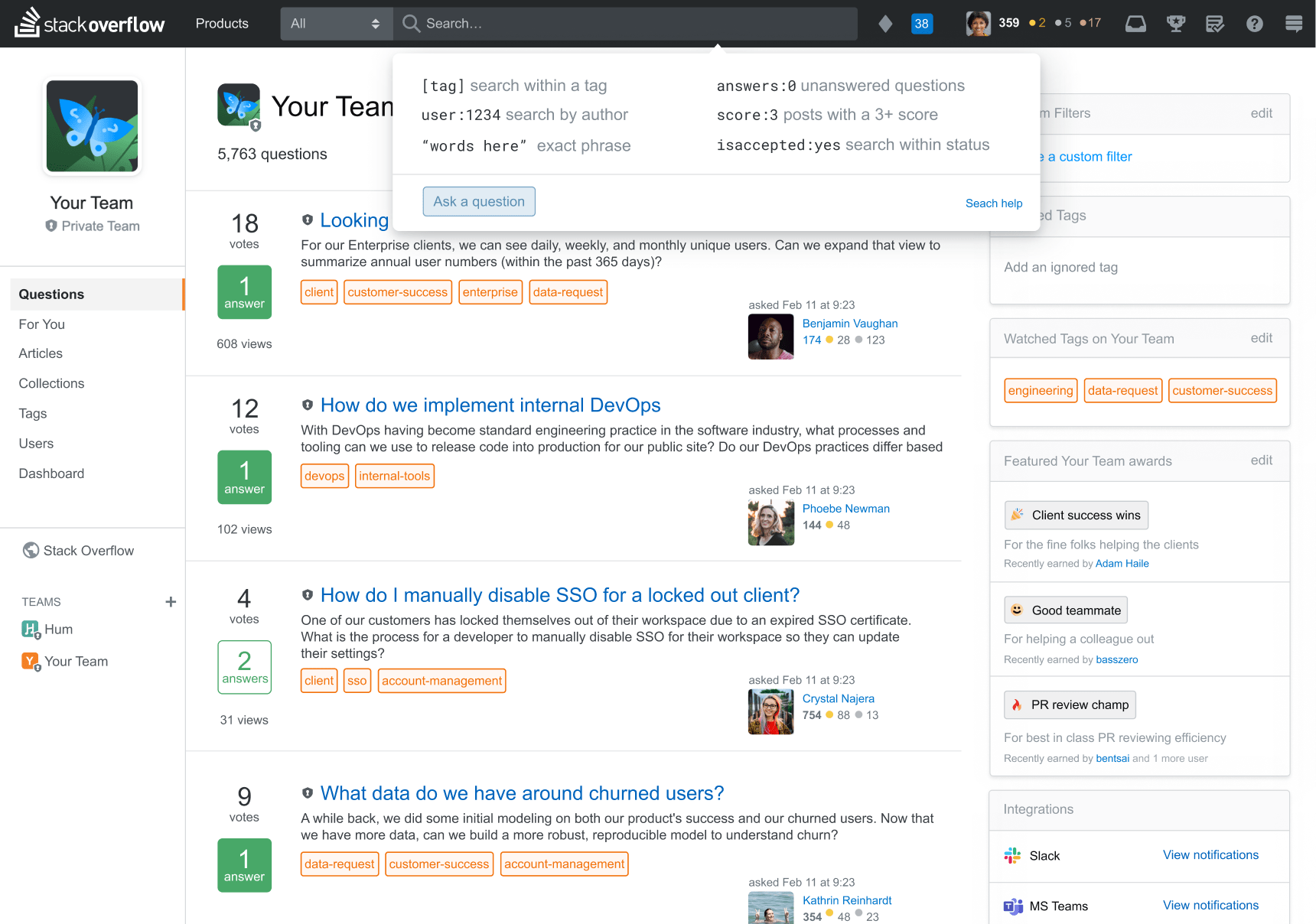

"To develop AI/ML models, our systems analyse Customer Data (e.g. messages, content and files) submitted to Slack..."

"For product experiences that tolerate such a level of errors, building with generative AI is refreshingly straightforward. But it also creates unattainable expectations..."

Adobe uses a "multi-layered, continuous review and moderation approach to block and remove content that violates Adobe’s policies"

Alphabet CEO: We see "clear paths to AI monetization". GCHQ Chief Data Scientist: Beware "automation bias"

Welcome to your latest episode of “is this exciting or is this mild AI exaggeration™”

"You can create a consumer, a brand strategist, a brand marketer, client, encoded with actual ground truth data, then critique the content that's been generated by the system with agents playing off against each other."