Look at Dael Williamson’s profile and you might wonder, briefly, how he wound up as a Chief Technology Officer. There’s the degree in molecular biology, the master’s in structural biology and the research position in structural bioinformatics: here is someone perhaps trying to save the world, who has wound up in IT of all places. Where did it all go wrong, The Stack wants to ask? Perhaps it didn't: one can do a lot of good with data...

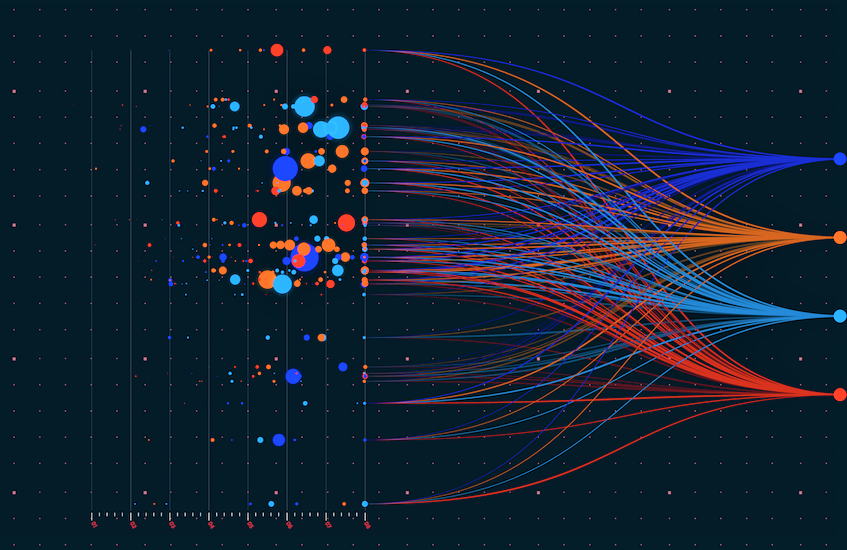

Williamson, who is CTO, EMEA at Databricks (he was previously European CTO at Avanade), says his data science journey started out in medical research, and he maintains a deep interest in working with Databricks’ customers in the life sciences space who are doing “fascinating things.” (For example, the company recently released a "solution accelerator" to help life sciences organizations start building a knowledge graph, complete with sample data, prebuilt code and step-by-step instructions. It lets users ingest clinical trial data, give it semantics by structuring it with the MeSH ontology, and then analyze it at scale using SPARQL queries.)

(In plain English, this gives researchers a common language, with shared semantics, across different data sources, so they can surface hidden insights from both structured and unstructured data.)

Databricks EMEA CTO Dael Williamson: "I had to wire stuff up..."

Rewinding the clock to the start of his career in his native South Africa, Williamson says he was “focused on proteomics; less lab-based wet work, more focused on the predictive stuff. What we were doing was data science but before it was really a ‘thing’, with the application of drug discovery and industrial bioremediation.

“Even by today’s standards the datasets I was working with were insanely big and they were insanely unstructured; electron microscope images and so on. So I used to have to wire up stuff; there was a huge amount of plumbing and programming just to get a job started. I had a real shortage of compute and storage...”

Synchronistically, around the time he was wrenching on resources in Cape Town, over at UC Berkeley Databricks’ founders were trying to get the world to realise the power of what is now Apache Spark, the open-source unified analytics engine for large-scale data processing. To say they ultimately succeeded is an understatement: Databricks launched as a provider of managed Spark services but is now an all-singing, help-you-with-your-data hub, offering data engineering, governance, machine learning, data science, streaming, warehousing capabilities and more via its Databricks Lakehouse Platform. In August 2022 it publicly hit the magic $1 billion ARR mark to boot.

As Dael Williamson puts it: “When I first saw Spark, I knew exactly what it meant. As I went through my career, I was always a big fan of introducing Spark and expanding it into the cloud; I could see where that was going.”

European enterprise data maturity? “It’s a spectrum…”

Mostly, of course, we’re talking about the here and now, and one thing The Stack wants to know from the Databricks EMEA CTO’s bird’s-eye vantage point is how mature he finds the European companies Databricks works with, whether in innovating with data or simply getting a grip on their data assets.

He says: “We have over 7,000 customers and some of them are digital natives that are still trying to figure out product distribution or market fit and others are 200-year-old enterprises that are trying to course-correct and find innovation at scale, so it’s a spectrum – and we see that spectrum across multiple dimensions. Maturity is one. And a lot [of data transformation projects] fail, which is a really fascinating thing. A lot of this has to do with the fact that their data is kind of sprawled; even small, emerging companies make a lot of reactive decisions to try and win business and build business, while a lot of established businesses are trying to figure out how to change the business while the plane is flying, without disrupting their revenue models…"


He adds: “Data is very much that connective tissue that sits between business and technology, but a lot of companies really haven’t recognised how to unlock it [and] there’s a tax that happens as data moves from one system to another [amid this] heterogeneity that's happened in their complicated technology landscape.”

Pressure is mounting on data leaders, he says, not just to tidy up and start delivering insights from data, but to do it cheaply and efficiently: “The CFO is saying ‘I want to preserve margins’; in other words, TCO reduction. The business is saying ‘I want to make new revenue, because we're kind of getting pinched, and I want to do it faster’; so that’s an efficiency and consolidation exercise… it’s a lot of pressure all round.”

In short, he says, business leaders are focused on clear outcomes from data projects, and with the economy taking a downturn, there’s less room for boundary-less data experimentation. Williamson points to British meal kit subscription service Gousto as one example of a recent customer success story. The company uses the Databricks Lakehouse Platform as the machine learning pipeline underpinning its recommendation engine, and says it has sharply cut model deployment time while also making commercial savings, partly through that improved efficiency.

(Gousto has said querying the data produced by customer orders and recommendations “was inherently tricky. Our data analysts had little to no visibility of this recommendation data… We used a number of model-building techniques [and the] team had to use four data repositories to change the ML model. Using these different techniques and repositories was a clunky process…” It now runs everything, from ETL and data collection to interactive dashboards and data monitoring, via the open source Databricks Lakehouse.)

Databricks EMEA CTO Dael Williamson tells The Stack: “There’s a whole lot of inventory [and other data] that they ingest in real time and the data processing job on that has been cut from two hours to 15 seconds.

“They've seen 40% productivity benefits for their data engineering delivery, a 60% infrastructure cost reduction by embracing open source Lakehouse and doing a really heavy job in auditing and downgrading their own usage and looking for efficiencies; they’ve really leaned in to a resilient data strategy…”

Four tips on data strategy

His role, it sounds like, often verges on the advisory, so we ask what nudges he gives clients when it comes to optimising their data strategy. He says: “Embracing open source is very much a leading indicator, because open source has a number of benefits. One, you're not locked into something; you're not paying tax every time you have to transform it [and you typically] find a team that can cover a broader spectrum [of capabilities] not driven by a single vendor. The second thing is a lot of them [clients] are moving to cloud because they want to get that benefit of the scale that cloud gives you.

“But there’s a big economic change there that’s not as simple as just capex vs opex; there’s also behavioural changes around how teams look at code; how much code costs to run and the discipline around engineering. [You also need to look out for] 'am I going to this cloud and creating something really complicated?' You could build a data platform in one of the hyperscalers with 15 different services, but that's a lot of plumbing, a lot of wiring that you still have to do [as you come off-premises] and you're going from complicated to complicated.”

“You also need to consider portability strategy: Regulatory bodies in more regulated industries are moving in that direction, with cloud concentration and vendor lock-in regulations. Then there’s auditing and downgrading your usage. Cloud's not expensive if you're super efficient and super disciplined about how you choose to work with the cloud. You need to be mindful of continued optimisation and very strong engineering discipline.”

“Finally, you need to ask, ‘Am I building a resilient data strategy?’” he concludes.

“There’s so many cool things that come with a modern data platform, especially if you've removed a lot of the complications, [if you’ve] brought different silos together into one team with healthy technology; because if you have engineers working with data scientists working with analysts, in one team, you get cognitive diversity and can start to focus on business problems and run simulations based on different scenarios.”