NVIDIA CEO Jensen Huang said that he sees an explosion of AI-enabled robots coming, with robotics firms increasingly buying up its hardware.

Huang made the comments as the chipmaker reported blockbuster revenue of $22.1 billion for its fourth quarter, up 265% year-on-year.

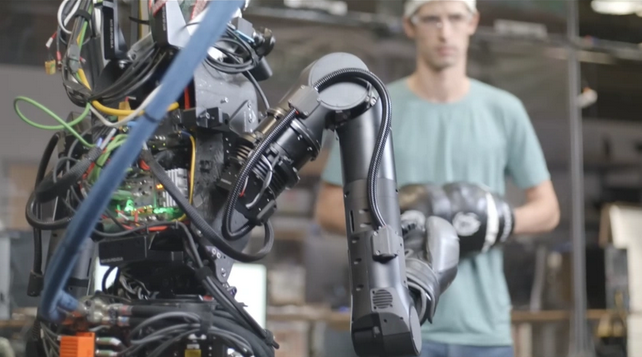

“There's just a giant suite of robotics companies that are emerging,” he said on the call, answering a question about “product allocation” (who NVIDIA is selling to at the moment). “There are warehouse robotics to surgical robotics to humanoid robotics, all kinds of really interesting robotics companies, agriculture robotics companies.”

That chimes with predictions from the likes of Gartner, which in December 2023 forecast that by 2028 “there will be more smart robots than frontline workers in manufacturing, retail and logistics…” It would also justify the instincts of MongoDB co-founder Eliot Horowitz, who, seeing a market about to explode, set up a robotics software company, Viam.

“Inference workloads are off-the-charts”

It is, of course, data centres, including those of cloud service providers (CSPs), that have been the biggest buyers of NVIDIA’s hardware, spanning everything from GPUs to specialised high-throughput networking kit.

With many of them either already using or building their own hardware accelerators, however, the company has been keen to sell into what Huang described as a “whole new category” of smaller cloud providers focused entirely on providing on-demand inference and training services via GPU-centric data centres. (The Stack previously touched on this below.)

See also: AI cloud provider CoreWeave raises at a $7 billion valuation, as NVIDIA backs buyers

Meanwhile, NVIDIA’s software revenues hit a $1 billion run rate.

Accelerated computing requires a different software stack, and for AI that holds for every domain, “from data processing SQL data versus all the images and text and PDF, which is unstructured, to classical machine-learning to computer vision to speech to large-language models…” noted Huang.

That’s a huge opportunity for the company: “All of these things require different software stacks. That's the reason why NVIDIA has hundreds of libraries. If you don't have software, you can't open new markets. If you don't have software, you can't open and enable new applications.”

Enterprise companies don't have the engineering teams to be able to maintain and optimise their software stacks for many of these challenges, he suggested. “So we are going to do the management, the optimization, the patching, the tuning, the installed-base optimization for all of their software stacks. And we containerize them into our stack…”

The company sells that as NVIDIA AI Enterprise, which Huang described as an OS for AI and noted “we charge $4,500 per GPU per year.”

(If you thought AI hardware was a cash crop, in short, you ain’t seen nothing yet, was his suggestion and he wasn’t holding back: “My guess is that every enterprise in the world, every software enterprise company that are deploying software in all the clouds and private clouds and on-prem, will run on NVIDIA AI Enterprise, especially obviously for our GPUs. So this is going to likely be a very significant business over time.”)

“Consumer internet companies have been early adopters of AI and represent one of our largest customer categories,” he added.

“Companies from search to e-commerce, social media, news and video services and entertainment are using AI for deep learning-based recommendation systems,” Huang said, describing recommendation systems as “the single largest software engine on the planet”. These are moving from CPU-powered to GPU- and AI-powered platforms, he said.

“The amount of inference that we do is just off the charts now,” the ever-engaging CEO said, referring to the process of using a trained model to make predictions on new data. The company reported $60.9 billion in annual revenue, with earnings per share of $11.93, up 586%.

Supply chain constraints are improving, he added, whilst emphasising that the NVIDIA Hopper GPU alone has 35,000 parts (“these things are really complicated things we've built”). Cycle times are improving, he said, amid rampant demand for the company’s hardware, which has powered much of the recent generative AI explosion: “We're going to continue to do our best. However, whenever we have new products, as you know, it ramps from zero to a very large number. And you can't do that overnight…”

NVIDIA is preparing to ship its new H200, “with initial shipments in the second quarter,” said CFO Colette Kress. The new platform, which is based on the Hopper architecture and pairs the H200 Tensor Core GPU with HBM3e memory (understood to be from Micron), delivers 141GB of memory at 4.8 terabytes per second: nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100.