AI-powered "kill chain execution", brought to you with a side order of prompt injection fears.

The Stack

Interviews, insight, intelligence, and exclusive events for digital leaders.

All the latest

Government | Oct 13, 2025

And plays it cool, but yes, apparently it is a national security decision again.

Microsoft | Oct 10, 2025

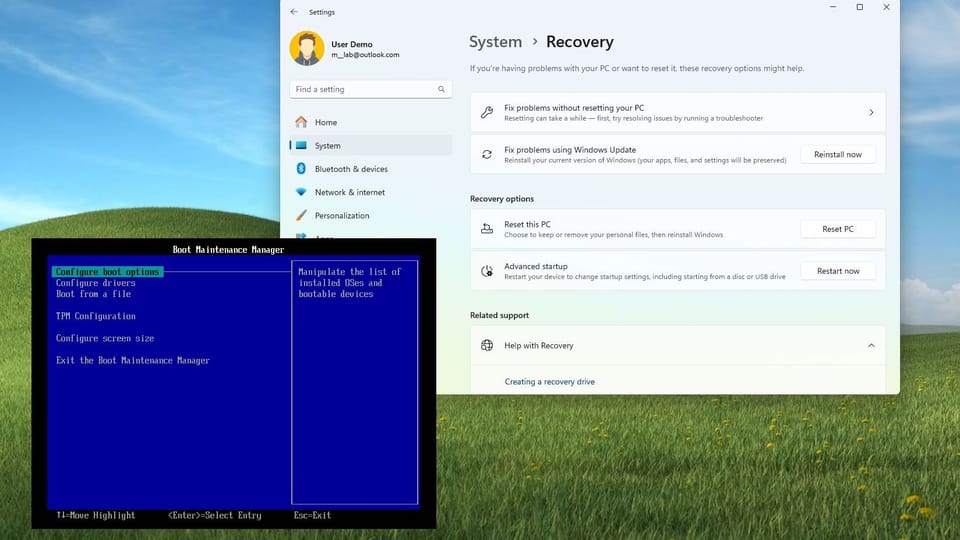

"We chose to build an entire execution environment from the ground up..."

Large Language Models can be backdoored by introducing just a limited number of “poisoned” documents during their training, a team of researchers from the UK’s Alan Turing Institute and partners found. “Injecting backdoors through data poisoning may be easier for large models than previously believed as the number of